(I have another post showing how to use .NET to extract Doc Attach files: https://dynamicsgpland.blogspot.com/2017/05/extract-and-save-dynamics-gp-document.html)

Today I was asked if there was a way to read an image file from the Dynamics GP Document Attach table so that the image can be added to a report. For instance, suppose a customer wants to display item images on an invoice.

I have done very little work with the blob / varbinary field type in SQL Server, so I didn't know how difficult it would be to do this. I quickly did some research and testing, and while I don't have a complete solution for inserting images onto a report, I did test one method for extracting files from the Dynamics GP Document Attach table and saving them to disk.

From what I could tell, there are at least three standard SQL Server tools/commands that you can use to extract an image or file from a varbinary field.

1. OLE Automation Query – This looks pretty sketchy and appears to be very poorly documented. I tried some samples and couldn’t get it to work.

2. CLR (.NET) inside of SQL – Appears to be a viable option, but it requires enabling CLR on SQL Server, which I personally would try to avoid on a GP SQL Server if possible, so I haven't tried this one yet.

3. BCP – I was able to get this to work and it was surprisingly easy, but I don’t know how easy it will be to integrate into a report process.

Since I was able to quickly get the BCP option to work, here are the commands I used. If you are able to shell out to run BCP as part of a report or other process, this should work.

For my testing, I attached a text file and a JPG image to a customer record, and was able to use these BCP commands to successfully extract both files and save them to disk.

The first time I ran the BCP command, without the -f option, it asked me four questions about the field and then prompted me to save those settings to a format file. I accepted all of the default options except for the header length (BCP calls this the prefix length), which needs to be set to 0, and then saved the file under the default name, bcp.fmt.

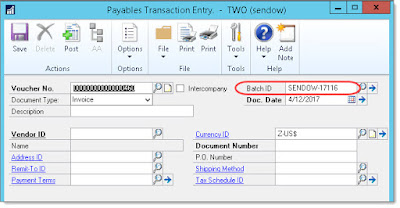
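For reference, here is a sketch of what the saved bcp.fmt should look like for a single varbinary column with the prefix length set to 0. This assumes the non-XML format that BCP writes by default. The first line is the BCP version number and will differ by installation, so treat the 14.0 here as a placeholder rather than a required value.

```text
14.0
1
1       SQLBINARY       0       0       ""      1       BinaryBlob      ""
```

The third line reads, left to right: field order in the file, file data type, prefix length, data length, field terminator, column order in the query, column name, and collation. The first 0 is the prefix length that was changed from its default.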

BCP "SELECT BinaryBlob FROM TWO..coAttachmentItems WHERE Attachment_ID = '88d9e324-4d52-41fe-a3ff-6b3753aee6b4'" queryout "C:\Temp\DocAttach\TestAttach.txt" -T -f bcp.fmt

BCP "SELECT BinaryBlob FROM TWO..coAttachmentItems WHERE Attachment_ID = '43e1099f-4e2b-46c7-9f1c-a155ece489fa'" queryout "C:\Temp\DocAttach\TestImage.jpg" -T -f bcp.fmt
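If the process that needs the file can shell out, the two commands above could be wrapped in a small script. This is only a minimal sketch in Python, not something I have wired into a report: the function names and the `database` and `format_file` parameters are my own placeholders, and it assumes bcp is on the PATH and that bcp.fmt is in the working directory.

```python
import subprocess
import uuid


def build_bcp_command(attachment_id, output_path, database="TWO", format_file="bcp.fmt"):
    """Return the bcp argument list for exporting one attachment's BinaryBlob."""
    # Parsing the ID as a GUID rejects anything that is not a plain GUID,
    # which keeps arbitrary text out of the SQL string.
    guid = str(uuid.UUID(attachment_id))
    # The database name is inserted as-is, so it is assumed to be trusted.
    query = (
        f"SELECT BinaryBlob FROM {database}..coAttachmentItems "
        f"WHERE Attachment_ID = '{guid}'"
    )
    # -T uses Windows authentication; -f points at the saved format file.
    return ["bcp", query, "queryout", output_path, "-T", "-f", format_file]


def extract_attachment(attachment_id, output_path, **kwargs):
    """Run bcp and raise CalledProcessError if it exits with a non-zero code."""
    subprocess.run(build_bcp_command(attachment_id, output_path, **kwargs), check=True)
```

Calling `extract_attachment("43e1099f-4e2b-46c7-9f1c-a155ece489fa", r"C:\Temp\DocAttach\TestImage.jpg")` would then run the same command as the second example above.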

I found that BCP was able to extract the files from the Document Attach table and save them, and I was able to then immediately open and view the files. Dynamics GP does not appear to be compressing or otherwise modifying the files before saving them to the varbinary field, so no additional decoding is required.
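One quick way to confirm that an extracted file came out byte-for-byte intact is to check its first few bytes against the well-known signature for its file type. If the prefix length is left at its default instead of 0, BCP writes a length prefix ahead of the data, so the signature will not be at the start of the file. The sketch below is a hypothetical helper, not part of my original test, and it only knows about three common formats.

```python
from pathlib import Path

# Leading "magic" bytes for a few common formats.
SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "png": b"\x89PNG\r\n\x1a\n",
    "pdf": b"%PDF-",
}


def detect_format(data):
    """Return the name of the first signature the data starts with, or None."""
    for name, magic in SIGNATURES.items():
        if data.startswith(magic):
            return name
    return None


def check_file(path):
    """Read only the first 16 bytes of the file and identify its format."""
    with Path(path).open("rb") as handle:
        return detect_format(handle.read(16))
```

For the JPG in my test, `check_file(r"C:\Temp\DocAttach\TestImage.jpg")` should return `"jpeg"` if the file was written without a prefix. Plain text files have no signature, so this check does not apply to them.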

Given how simple BCP is and how well it seems to work, I would probably opt for this approach over using .NET, either inside SQL or outside of SQL. But if you are already developing a .NET app, then it's probably better to use .NET code to perform this extraction.

Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.