My blog has moved! Please visit the new blog at: https://blog.steveendow.com/ I will no longer be posting to Dynamics GP Land, and all new posts will be at https://blog.steveendow.com Thanks!

Wednesday, July 28, 2010

I'm Hungry. For GP.

As soon as I heard about the book, I got in touch with the good folks at Packt Publishing and pleaded for a review copy as soon as it was available.

Unfortunately, I was a little bit ambitious. Between the in-laws visiting from China and my wife getting ready to have our second child in the next few weeks while my two year old demands more Dora,things have been hectic, to say the least. (Insane, perhaps?) I received the book, all shiny and crisp, but it had to sit on my desk for a few weeks until my sanity levels returned to normal.

So, finally, at 4:30am this morning, after I dropped off the in laws to go on a Las Vegas + Grand Canyon tour, I figured it was the perfect, and rare quiet opportunity to finally give The Cookbook the time that it deserved.

First, the "Cookbook" title is excellent, as it is written from the perspective of the user who wants to use GP. It isn't a dry or tedious reference manual that needs to be studied and digested (pun!). It's a book with great suggestions on actually using GP, getting the most out of GP, and pointing out those features that many users never knew existed, but would make their lives so much easier.

For example, have you configured My Reports? Or are you still wasting time navigating through the Reports menu while taking a call on your rotary phone?

And did you know that there is a way to fix AutoComplete errors without having to clear all of the AutoComplete entries? Seriously? I just learned about that from The Cookbook, and honestly I'm a bit embarrassed. With all of the repetitive entry I have to do for testing, and with all of the mistakes I make doing that data entry, I need this feature!

Hmmm, do you know how to quickly and fully create new inventory items without having to go into the 5 or 6 windows required to fully setup a new item? Page 69 has step by step instructions on how and when to use this feature that, trust me, will save a lot of time.

Are you wasting time waiting for data to export from SmartLists into Excel? Pages 138 - 154 offer three great ways to bypass SmartLists and get your data directly into Excel, which is especially powerful for users who regularly need to extract thousands of records out of GP. I've had clients that regularly exported over 100,000 records from GP for analysis in Excel, so whenever I see someone try and export more than 1,000 records, I definitely recommend one or two of the approaches Mark has laid out in detail in his Cookbook (and I just learned about a new one!).

And to wrap up my early morning review, I see that The Cookbook dutifully covers how to use the Reconcile to GL feature. I can't count the number of times I've received calls from clients who discovered that their subledgers don't tie to their trial balance. They don't know where to start, or understand how to perform the subledger reconciliation in GP. This is a process that every consultant or accounting manager should be familiar with.

Even if you think you know everything about GP (in which case you are fooling yourself), this book at a minimum reminds you of many features and functions that you may have forgotten or not used in a while. Collectively, the valuable and concise content on the 297 pages will provide value to you and your organization.

If you have invested in Dynamics GP, you've already spent thousands, or tens of thousands of dollars in software, consulting, and training. And I'll bet that the Microsoft Dynamics GP 2010 Cookbook will humble you once you realize how many useful features there are in GP that you haven't bothered to use or that you never even knew about. It's well worth the investment and should be standard issue for any consultant or GP customer.

In fact, for you consultants out there, it would be a great gift for your clients, and a great excuse to visit them and have a conversation to discuss if they are using GP as efficiently and effectively as possible.

Hats off to Mark for putting together such a valuable handbook for the GP community!

Tuesday, July 27, 2010

Code Review: Catching Unhandled Exceptions in .NET

I recently deployed three eConnect 9 imports at a client site, just like I've done with dozens and dozens of .NET eConnect integrations.

The client uses Citrix, and has two load balanced application servers for Dynamics GP. To install my three imports, we had to install them on both of the Citrix servers. The installs went fine, the apps all appeared to work well, and I thought my work was done.

Until the key business user launches one of the imports and gets an error. The app doesn't launch, no windows appear, she just sees a "crash" error message.

After further testing, we find that the app launches fine on Citrix server #1, but gives her the error on Citrix server #2. Exact same app.

I then check Event Viewer and see some arcane error messages related to the application crash. They are basically generic .NET errors that don't provide any guidance as to the cause of the crash.

EventType clr20r3, P1 invoiceimport.exe, P2 1.0.2.0, P3 4c374d29, P4 mscorlib, P5 2.0.0.0....

I find out that these are very generic .NET errors that are occurring because I do not have an error handler at the very beginning of my application code. After further research, I learn about "Unhandled Exceptions". Although it is possible to handle most exceptions with a Try Catch block in the main program code, there is also a dedicated Unhandled Exception event handler.

My current interpretation is that this is one way to handle exceptions for situations where you don't have a Try Catch block, or where an error occurs during initialization, such as referencing a DLL or external assembly.

static void Main()

{

AppDomain.CurrentDomain.UnhandledException += new UnhandledExceptionEventHandler(CurrentDomain_UnhandledException);

Application.SetCompatibleTextRenderingDefault(false);

Application.Run(new MainForm());

}

static void CurrentDomain_UnhandledException(object sender, UnhandledExceptionEventArgs e)

{

Exception ex = (Exception)e.ExceptionObject;

MessageBox.Show("An unexpected error (unhandled exception) occured trying to launch " + Properties.Settings.Default.appName + ": \r\n\r\n" + ex.InnerException.ToString());

}

So in my case, the goal was to at least return some type of detail about the cause of the crash. After installing the new version with the UnhandledException handler, we received this message.

Certainly better than the event viewer message, but still pretty cryptic. Since I have become more familiar with the .NET exception messages, I knew to start at the top and work my way down, or just look at the InnerException.

So I focused on the System.Drawing.Icon.Initialize error. My apps are basic business apps and aren't doing anything special with icons, so this didn't mean much to me. After quite a few Google searches, I stumbled across an MSDN Forum post that described the same error. The resolution in that case was to replace the icon on a window.

Seriously? Since all three of the applications used the same icon, and since the exact same app worked on the other Citrix server, I was pretty doubtful that replacing the icon would solve the problem. But it's a simple fix to try. I removed the icon from all of the forms in my application, saved and closed Visual Studio, then recreated the icon file using an icon editor, and then added the icon back to the forms. I then recompiled the app.

Today we installed the new version of the import, and voila, it worked.

It still doesn't make any sense to me, but it is nice to be able to resolve such a random and obscure error for once.

401k Match, Make Sure You Get Your Money!

Take the example of a 50% match up to 5%. The employer matches 50% of the employee's contribution up to 5% of their gross wages. To accomplish this, you will need to set up both a deduction (to capture the employee contribution) and benefit (to capture the employer match).

First, set up the deduction (Microsoft Dynamics GP>>Tools>>Setup>>Payroll>>Deduction). It is important that you configure the deduction with a "Percent of Gross Wages" calculation method. And don't forget to set the annual maximums as applicable.

Next, set up the benefit (Microsoft Dynamics GP>>Tools>>Setup>>Payroll>>Benefit). Here is where the setup gets a bit tricky. First, you will want to set the calculation method to "Percent of Deduction" and enter the match percent (in our example, 50%). Then, in the bottom left hand corner make sure the Based On field is set to "Deduction". Set the radio button to "Selected" and then insert the deduction code you set up previously. Enter the 5% match maximum in the Employer Maximum field. Viola! You are done.

A few notes:

- You must use the Percent of Deduction method on the benefit for the Employer Maximum field to be available.

- For the Employer Maximum to work, the benefit must be based on a deduction that uses the "Percent of Gross Wages" calculation method.

- Remember to limit the Based On for the benefit to only the 401(k) deduction.

- Remember to include both the deduction and benefit in your payroll build.

Hope this helps clarify the setup for anyone needing 401(k) match tracking in GP. Feel to post your own tips, tricks, or questions.

Happy set up!

Christina

Thursday, July 22, 2010

Lesson in Code Re-Use: Vendor Item Number Error Importing POP Reciepts Using eConnect 9

Nothing to see here folks, please move along...

I've spent the last puzzling hour trying to figure out why I am seeing strange errors with a POP Receipt import that I've developed using eConnect 9.

When importing a particular receipt, eConnect returns an error saying that the vendor item number was invalid.

Okay, no problem, I added some validation to check the vendor ID, item number, and vendor item number of the receipt data so that a validation error would be logged in case there was a discrepancy.

But after adding my validation, the receipt data validated successfully, sent the receipt on to eConnect, and eConnect once again returned the same vendor item number error.

Thinking that my validation code was somehow being skipped, I added detailed logging to try and see how the validation code was being skipped. To my surprise, I saw that it was not being skipped. It was running, validating the receipt, and the data appeared to be valid.

Validating receipt 234376027 Item 5948-267764

Validating vendor item for PO PO100585, Item 5948-267764, Vendor Item D81639D

--Vendor item IS valid

I then logged into GP and checked the PO, and sure enough, the PO showed the same item number and the same vendor item number.

Puzzled, I then went back to the eConnect error. After looking at it more closely, I saw the oddity.

Error Number = 9343 Stored Procedure taPopRcptLineInsert Error Description = Item/vendor item/vendor combination is not available to receive from the PO Line

POPTYPE = 1

POPRCTNM = 234376027

PONUMBER = PO100585

ITEMNMBR = 5948-267764

VNDITNUM = 296059816397

VENDORID = GPINGRAM

Notice anything fishy?

Notice how the vendor item number in the eConnect error data does not match the vendor item number that I validated?

I then looked up the vendor item number record in GP, and sure enough, it showed 296059816397, and not D81639D.

From what I can tell at this point,

In GP 9, I am able to manually enter the receipt fine by using the vendor item number from the PO, so this is obviously a problem with my code!

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified Professional. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Speedy Speedy Payroll Entry

Friday, July 16, 2010

Code Review: .NET Coding Tip

I often have classes that perform routine, discrete functions, such as getting preformatted dates, running regex routines, or querying SQL Server. In the routine that my friend saw, I was using a standard data access class that I use for querying a GP database.

The following two lines create an instance of the "GP" class, and then call the ExecuteScalar method. But he noticed that the GP instance was only being called once in my routine.

He showed me the following syntax:

With this approach, you instantiate the class and call the method in one line. Pretty slick.

This reminds me somewhat of how I sometimes add SQL a parameter directly to a command object and assign the value all in the same line.

These types of tricks can sometimes dramatically reduce the number of lines required for a given operation. I'm not a fan of compactness just for compactness' sake, but I do appreciate tricks that improve readability.

Thursday, July 15, 2010

Consultant Tools Series: TrueCrypt

One is an independent CPA that has client information in his tax preparation and accounting software. Another is involved in high stakes business transactions and legal proceedings, and travels to dozens of countries every year to meet with investors, attorneys, and governments. Another friend has a business that provides health insurance, retirement plans, and other employee benefits to businesses, and has to store a lot of very sensitive medical and financial information.

In the Dynamics GP world, the most sensitive data that I've worked with are client databases with full HR and Payroll records, or client data files that have confidential employee information, including name, address, phone, SSN, etc. Just today I received some files containing employee information for an HR and Payroll integration to Dynamics GP, so I immediately wanted to encrypt the files.

While there is no single answer to the question of how such confidential information should be managed, when most people think of securing such data, they often use two phrases: "password protect" and "encrypt". It's a good start, but that's often the limit of their knowledge.

Before discussing details, I usually ask the person what concern or risk they are trying to address. How sensitive or confidential is the data? Is it only of interest to competitors? Would criminals want it? Would law enforcement want it? Would lawyers or private investigators want it? Would a government want it?

Most people just don't want the data wandering around publicly, and don't want it exposed if a computer is hacked or stolen. But some people do legitimately need to ensure that certain files cannot be accessed by a government agency, even if the computer is confiscated.

Although there are probably lots of different options, I usually offer the following choices:

1) Put a password on the Excel or Word file. This is usually adequate to prevent inadvertent disclosure of sensitive information, such as a list of tentative pay raises, bonuses, or terminations at a company. The passwords on Microsoft Office files can either be stripped out or cracked by various software packages, so the Office passwords only provide a low level of security. And one significant downside is that each file must have a password, so if you use different passwords, don't access the file regularly, or have to open a file that is several years old, it is common for people to forget the password (myself included).

2) Use WinZip or WinRAR to compress one or more files, and then use a password on the Zip or RAR file to encrypt and secure the files. This has some benefits, such as being able to secure multiple files with one password, and the ability to secure files that don't have their own encryption (like a CSV or text file). But such passwords really aren't any more secure than an Office file password, as password crackers can attack zip files as well. Another downside to using WinZip with a password is that although the compressed files may be encrypted, anyone who opens the zip file can see its contents, which I personally don't like.

3) If those basic options are insufficient, I then jump straight to TrueCrypt. TrueCrypt is a free, open source encryption application that provides very secure on-the-fly file and disk encryption. It was created in 2004, and is widely recognized as one of the best disk encryption options available today. The fact that it is free and open source means that I don't have to purchase upgrades as new versions of Windows are released, as encryption techniques change, or as the software vendor goes bankrupt or is acquired like at least one of the other disk encryption products I have tried.

TrueCrypt is available for Windows, Mac, and Linux, and if you review the features and documentation, I think you'll see how seriously the product addresses security.

TrueCrypt recently made news when the Brazilian government and the FBI were unable to crack hard drives encrypted using TrueCrypt. While few people need to legitimately hide their data from such organizations, it's reassuring to know that the solution works when used properly.

There are a few key things that I like about TrueCrypt.

1) It is very easy to use. Even if you don't understand how it works, the TrueCrypt beginner's tutorial walks you through the very simple process of creating a new encrypted container. Once that container is setup, it's simply a matter of mounting the file and entering a password, and you have a new drive letter in Windows.

2) Because it is volume based, many applications can use TrueCrypt volumes transparently. For small businesses or CPAs that run Lacerte tax software or QuickBooks, it's very simple to have applications read and write all of their data and files to a TrueCrypt volume, just like any other hard drive.

3) TrueCrypt doesn't require extra steps to encrypt data. Because it encrypts and decrypts data on the fly as it is read and written to disk, there are no extra steps to secure the data, which is a huge benefit for a non-technical user. Once the volume is dismounted, it just becomes an anonymous file on your hard drive.

4) TrueCrypt supports "key files" in addition to passwords. Instead of trying to remember multiple 10-20 character secure passwords, you can use just about any file, or combination of files, to serve as your 'password'. If you have a hundred MP3 or JPG files on your computer, you can randomly choose one or more of those files to serve as your key. Just make sure to keep those MP3 or JPG files safely backed up!

5) Once you have your TrueCrypt container setup, you can use online backup services to back it up in the cloud, without worrying about whether the backup provider is really encrypting your data or has adequate safeguards to ensure that your data can't be viewed.

This morning, after receiving a CSV file containing the data for over 900 employees, I immediately created a new TrueCrypt container and then moved the files to the mounted TrueCrypt volume. It took maybe 2 minutes, literally, and that data is now encrypted, maintenance free.

In this case, I chose to use a standard password for the container, and then stored that password in a RoboForm secure note for safe keeping, since it may be days or weeks before I need to access the files again.

And with that, YCpRz37dTkC4Vh5PLIjuyQmBslgBB4/Oy+LPGjajHao=

(That's "Have a good weekend!", in 128-bit AES)

Wednesday, July 14, 2010

Depositing Direct Deposit Information Directly

So, okay, fine. We use integration manager to import a lot of data including employee maintenance info, deductions, benefits, and pay codes. But what about direct deposit information? This is critical and subject to entry mistakes, so even if keying is possible...I don't know that I want to risk an incorrect account number or routing number. So, I use table import.

DISCLAIMER :) Always thoroughly test table imports in a test environment first, and also always make a backup prior to performing a table import.

Generally, I am not a fan of table imports because they can be prone to issues if you aren't completely confident of the table and field requirements. But this is one I have done repeatedly and with great success. Thousands of records can be loaded in seconds, and you can avoid carpal tunnel from the manually entry :)

Table import is located under Microsoft Dynamics GP>>Tools>>Integrate>>Table Import. And it should be noted that the import definitions are stored locally in the SY50o00.dat, SY50000.idx, SY50100.dat, and SY50100.idx files stored in \Program Files\Microsoft Dynamics\GP\Data. So if you plan to use the table import from more than one machine, you will need to copy these files from the machine used to create the import. Also, make sure you back up these files after creating the import in case they are accidentally overwritten during a new installation or are lost when a workstation is replaced.

To import to Cards>>Payroll>>Direct Deposit, you must create two table imports. One for the header and one for the detail record.

The destination table for the header record is Direct Deposit Employee Master. And the upload file should contain one record per employee with the Employee ID. The file can then be mapped to the header fields as follows:

- Employee ID= Employee ID (from file)

- Inactive= 0 (Constant)

- DD Remainder=1 (Constant, always will use first account for remainder of DD. Can be supplied in file instead if different settings are needed.)

- Index=0 (Constant)

Now for the detail records, the destination table is the Direct Deposit Employee Accounts Master. The upload file should contain the employee ID, index (incremental per employee, so if the employee has two accounts then the first one would be labeled 1 for the index and the second one would be 2 and so on), percentage (make sure this is formatted as a number like 10 for 10%), amount (only one of these fields should be completed for each line- percentage or amount), account number, routing number, type (23 for checking, 33 for savings). The file can then be mapped as follows for the import:

- Employee ID=Employee ID (from file)

- Index=Index (from file)

- Inactive= 0 (Constant)

- Deduction Method= 0 (Constant)

- Deduction= -none-/blank (Constant)

- DD Prenote= 1 (Constant)

- DD Prenote Count= 1 (Constant)

- DD Percent= Percentage (from file)

- DD AmountDir=Amount (from file)

- DD Account Number= Account Number (from file)

- DD Transit Number= Routing Number (from file)

- DD Transaction Code= Type (from file)

After importng, I run check links (Microsoft Dynamics GP>>Maintenance>>Check Links>>select Payroll) on the Payroll Direct Deposit Master. The records will have a status of Prenote (Cards>>Payroll>>Employee) and when you are ready to make all records active you can run the following script against the company database in SQL Server Management Studio.

UPDATE DD00200 SET DDPRE=0

This will update all direct deposit records to an Active status.

Hope some of you find this helpful, and as always, please share your comments, questions, and experiences.

SQL Server Backups: A Public Service Announcement

But let me start with an interesting story, and then share why I'm yet again addressing this very tired subject of SQL Server backups.

A few years ago, I was working with a client located in downtown Los Angeles. They are a retailer with over $100m in revenues. After implementing their Dynamics GP system, I noticed that we were running low on disk space for the SQL Server backups, and inquired about getting more space, and making sure that the backups were being copied from the server to a secondary archive location.

I learned that the SQL Server backups on the local server hard drive were the only backups that we had. The files were not being copied to tape. Not to SAN. Not to removable storage. When I asked about getting the GP backups included in the enterprise backup schedule and off site backups, things got more interesting. I was told that the company effectively had no backup solution in place. At all. I was told something about interviewing new enterprise backup vendors and it was taking a long time and they hadn't yet made a decision and they weren't sure when they might have a solution, etc., etc.

In disbelief (or so I thought), I spoke with a senior executive, who I'll call Ed, who headed up their internal application development. He told me a great story that was so ironic I had to redefine my definition of disbelief.

Rewind to 1992. The company was much smaller and back then Ed was apparently the main IT guy at the company, handling everything from hardware, to application development, to backups. Each night, he would grab the backup tape, stick it in his pocket, and go home. On the historic day of April 29, 1992, Ed was doing some coding and typical work, just like any other day.

But then some coworkers asked him to come look at the television. The staff huddled around the TV, watching the beginning of the Los Angeles Riots that followed the Rodney King trial. Wow, that's pretty amazing and scary, they thought, but they eventually went back to their work. A while later, someone noticed that the TV news was showing pictures of looting and rioting a few blocks away from their offices. They were able to see live video of buildings that they drove by every day on their way to work.

Seeing how close the activity was to their office, they realized that the situation was pretty serious, and they decided that they should have everybody leave the building and go home for the day. Ed was planning on going home, but he wanted to finish up a few last things. As his coworkers nagged him to leave, he grabbed his keys and some papers and started to leave his office. He paused, and walked back into his office to grab the nightly backup tape like he did every night. He casually dropped the tape in the pocket of his hawaiian shirt and then drove home.

By the next day, he learned that the company's entire office building had been burned to the ground in the riots. After getting over the shock and the implications of losing their entire building, he realized that the little tape that he took home that night in his shirt pocket was the only backup of the company's data. He eventually had to rebuild the company's IT infrastructure and data based on that one backup tape.

Ed then explained that he definitely knew the importance of having backups. Yet, I explained, 15 years later, his company doesn't have any backups. He tried to lobby to get some attention on their backup solution, but it wasn't his responsibility. Several months and many pleas later, still no backups.

So fast forwarding to this year, I worked on a project with a client that had a pretty massive GP company database--over 130GB. As the database grew, the server started to run out of local disk space and the backups were failing, since a week of backups was starting to consume nearly a terabyte of disk space. After a few weeks of this, the client finally added disk space and eventually had to move the SQL backups to a new storage device to accommodate the massive backup files.

All is well, right? Well, when I recently went to verify something on the server, I happened to check the Event Viewer to look for some errors. What I found were dozens of critical SQL Server errors that had been occurring for days. I/O errors. Operating system write failures. Some pretty scary looking stuff.

I discussed the errors with the GP partner, and they indicated that the backups were the responsibility of the client's IT team, who was supposed to be monitoring them.

If you were to look at the drive that stored the backup files, you would see nice neat looking bak files, as if the SQL backups were working properly. But you also need to check the server logs to see if any problems occurred. The backup routine may have written 129GB out of 130GB to a backup file and then failed, leaving you with a potentially useless backup.

Yes, it's a bit of a hassle, and yes it's monotonous to monitor something that usually works properly, and yes the chances of needing to restore from backup are usually remote, but would you rather have the excitement of not having a backup when you needed one? Or would you rather be the person who made sure that you had that backup tape in your shirt pocket just in case?

Monday, July 5, 2010

Completely Removing a Posted SOP Invoice From Dynamics GP

They imported SOP invoices with non-inventory line items from their operations system to GP, and each invoice number matched the project number from their operations system, keeping things nice and tidy. Apparently an employee found a mistake in one of the imported invoices, and instead of deleting the invoice and reimporting it before posting, the employee voided the receivables transaction associated with the invoice after it had been posted. Because the voided transaction was using the invoice number in GP, they were unable to re-import the corrected invoice with the same invoice number. There were probably a few alternatives to re-using the same invoice, but those would cause some problems for them down the road when it came to their reporting and reconciliation.

I recall asking a few colleagues how the voided invoice (and its number) could be completely removed from GP, and I think one or two people told me that it couldn't be done (or they didn't know how), perhaps short of SQL deletes and updates. But after checking with a few gurus and poking around some rarely used GP windows, I came up with this relatively long, somewhat tedious, but apparently effective method.

I'm posting this because I'm working on an integration and recently had to remove some invoice numbers from my test database for testing. I was able to use this process to remove the invoice numbers and complete my testing.

One disclaimer is that I haven't looked at this procedure since 2006, and haven't bothered to see if there is a better, more efficient, or different way to do it. I suspect there might be a different or simpler way, and welcome any comments with suggestions. I didn't find any problems with this approach, and the client didn't seem to encounter any issues, but I am guessing that there could be some negative consequences to this approach for certain clients depending on how they use GP and SOP invoices (i.e. if they use inventory items, unlike my client). So this is by no means a universal guaranteed solution.

And finally, there are a few caveats about this process. First, it involves several steps that must all be performed properly to avoid deleting data accidentally. Second, it requires that you perform the process on each document you wish to remove--it shouldn't simply be run for a customer or range of customers. Third, it involves removing data completely from GP, as in permanently deleted.

Because of these risks, I strongly recommend that you make a complete backup of your GP databases before attempting this, and also make sure to test the process on a test database first so that you confirm all of the steps and data values.

=============================================

This procedure provides instructions on how to completely remove a sales order invoice that was posted and then voided through the Receivables Posted Transactions Maintenance. (Transactions -> Sales -> Posted Transactions)

Verify the transaction under the Receivables Transaction Inquiry window (Inquiry -> Sales -> Transactions by Customer)

The invoice should be listed as an OPEN* and Voided transaction (as indicated by the Asterisk next to Open).

Make sure that all checkboxes are checked in the Maintain History section. This specifies that Great Plains will move these transactions from Open to History and keep them rather than delete them.

The Paid Transaction Removal process will move the document from an OPEN status to HISTORY status.

Open the Paid Sales Transaction Removal process (Tools -> Routines -> Sales -> Paid Transaction Removal). Note that this process can only be run on the Customer, and not on a single transaction.

1. Select the Customer ID in the From and To fields

2. Leave Class ID set to All

3. In the Remove section, the Void checkbox must be checked

4. The other checkboxes can be checked if desired, but are not necessary

5. Leave Balance Forward Consolidation unchecked

6. Check Print Register

7. Click on the Process button

8. A "Remove Transactions" confirmation dialog will appear—click Yes

9. Print the register to screen (and printer if desired) to confirm that the documents were properly removed

When this process is complete the document should have been moved from OPEN to HISTORY.

Now that the voided document has been moved to history, the Receivables portion of the transaction can be removed from the database.

Open the Remove Receivables Transaction History window (Tools -> Utilities -> Sales -> Remove Transaction History).

1. Change the Range to Document Number

2. Enter the sales invoice number in the From and To fields. Do NOT use a customer number.

3. Click on the Insert Button

4. Check all checkboxes at the bottom left corner of the window

5. Click on the Process button

The document will no longer be listed in the Transaction Inquiry window.

The final step is to remove the Sales portion of the transaction from the database.

You can see that even though the receivables document no longer appears in the Receivables Transaction Inquiry window, you can still see the sales document in the Sales Order Processing Document Inquiry (Inquiry -> Sales -> Sales Documents)

To remove the sales document, go to Tools -> Utilities -> Sales -> Remove Sales History.

Choose a Range of Document Number, enter the document number in the From and To fields and click on the Insert button.

Do NOT use a customer number for the range.

Make sure that all checkboxes are checked at the bottom of the window.

Click on the Process button

Click on Redisplay in the SOP Document Inquiry window and the document should no longer be listed:

The above process removes all of the document records from the GP database; however, if sales tax is used, there are a few ancillary sales tax records that are not removed during this process.

This step must be performed by a Database Administrator.

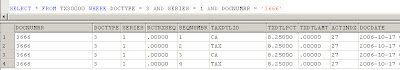

Run the following select statement on the company database in question:

SELECT * FROM TX30000 WHERE DOCTYPE = 3 AND SERIES = 1 AND DOCNUMBR = 'XXXX'

(change XXXX to the RO number / document number for the voided invoice)

You should see two records for each SOP line item:

If these records match the voided transaction, they need to be manually deleted.

DELETE FROM TX30000 WHERE DOCTYPE = 3 AND SERIES = 1 AND DOCNUMBR = 'XXXX'

After this step is completed, you will be allowed to re-use the invoice number again.