Dynamics GP eConnect has a "feature" called Transaction Requester that, among other things, allows it to compile a list of all records or transactions that have been inserted, updated, or deleted in Dynamics GP.

eConnect Requester fundamentally consists of SQL Server database triggers that monitor changes to the Dynamics GP database tables and record any inserts, updates, or deletes that are made to that table. When the triggers detect a change, they write a record to the eConnect_Out table.

If you want, you can also use the eConnect Outgoing Service, which can output XML files or send XML to a queue in MSMQ.

eConnect Requester is a feature that is very valuable for integrations where Dynamics GP is the system of record and data needs to be sent from Dynamics GP to an external system. For instance, if an external system needs the ability to create purchase requisitions, you may want to automatically send all new or updated vendor records and inventory items from GP to that system so that they only need to be maintained in GP. Or if you use a third-party logistics vendor or fulfillment center, you may need to send purchase orders or sales invoices to those facilities so that they can receive and ship your products.

This all sounds great, and in concept it is, but there is one significant fundamental limitation to eConnect Requester. If you look at the eConnect_Out database table, you will see that it really only tells you which table was updated, the index value (document number) for the record, and whether the record was inserted, updated, or deleted.

This approach typically works fine for two types of database records: Master records, such as customers, vendors, items, GL accounts, etc., and transaction records that are posted and fully committed, such as posted GL journal entries, posted vouchers, posted receivables transactions, etc.

This means that eConnect Requester has some drawbacks with transactions that are not posted, such as Purchase Orders. Purchase Orders are somewhat unique because they can be created, saved, edited, approved, printed, released, changed, printed again, voided, and deleted. This is a challenge because eConnect Requester doesn't track these higher level document "states" or status--it only tells you whether a new record has been inserted into a table, whether a record has been updated, or whether a record has been deleted.

This is a limitation / challenge / problem when you have to export purchase orders. Let's say that you are sending purchase orders to an external 3PL warehouse that will be receiving your inventory. The warehouse needs to receive any new purchase orders that have been entered into Dynamics GP. But not every PO. You probably don't want to send unapproved POs. And you probably don't want to send unreleased POs. So when your export process sees a PO record in the eConnect_Out, you can't just assume that it is ready to be exported. You have to check the status first and determine whether the PO has been approved and released.

So what if the PO is then changed? Maybe a line is added, another line is cancelled, and one or more quantities are updated. Your 3PL warehouse needs to know about these changes so that they can receive against accurate purchase orders. The warehouse doesn't want the entire PO sent to them after every change--they only want the changes sent to them.

Quickly you will learn that eConnect Requester can't tell you about any of those things. Even though Purchase Order Line (POP10110) is an option in the Requester Setup, it turns out that the Requester only tells you that a PO record in the POP10100 table has been updated. You have to figure out what changed on that purchase order and whether that change needs to be sent to the 3PL warehouse. And unfortunately, eConnect requester offers no help there.

One approach is to store a copy of every PO line that has been exported. That way you essentially have a full copy of the PO and all of its lines and quantities, so if anything changes on the PO, you can compare the old values to the current values to determine what has changed. This may seem like a fair amount of overhead just to export POs, but it isn't difficult, works well, and has the benefit of serving as an audit trail for the export process, allowing you to verify that records were exported properly. But, you have to write the queries and code that will perform all of the comparisons, and handle all of the logic to determine what to export when a PO is changed. Trust me when I say that this can get very, very tricky for reasons you would least expect.

Although this "limitation" does seem burdensome, eConnect Requester can't fulfill all requirements, so it fulfills the fundamental and basic ones, and you are free to develop a solution that meets your specific business requirements.

Overall, eConnect Transaction Requester is a great, reliable resource for automating the exporting new data from Dynamics GP--just make sure that you are aware of the limitations before you jump into a project that requires it.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

My blog has moved! Please visit the new blog at: https://blog.steveendow.com/ I will no longer be posting to Dynamics GP Land, and all new posts will be at https://blog.steveendow.com Thanks!

Friday, September 30, 2011

Dynamics GP Reminders Make GP Load Very Slowly

This week I have been working with a client who is preparing a new SQL Server in preparation for their Dynamics GP 2010 upgrade.

One strange behavior that he observed is that when he launched Dynamics GP 2010 directly on the SQL Server, it would take over 5 minutes to load. During that time, the GP window would be blank and have the famous "Not Responding" message in the title bar.

However, when GP was launched on a new Terminal Server, it "only" took about 100 seconds to load. Since we were troubleshooting other issues with the SQL Server, we thought that the server might be the problem. But after running SQL Profiler and tracing the Dynamics GP activity during the load process, we discovered that GP was running over 50,000 queries against the database before the application was usable. Once the queries stopped, GP seemed fine.

We traced the activity when launching GP on the Terminal Server, and the same 50,000 queries were being run, but for some reason it took less than 2 minutes for GP to fully launch on that server.

What originally seemed like a SQL Server issue now seemed to be a GP issue.

Reviewing the queries, we saw that they were against the service contract table, and GP was requesting every single record in that table, one record at a time. After scratching his head for a while, the client realized that the sa user had a reminder related to service contracts. Sure enough, after deleting that reminder, GP loaded fine, and loaded quickly.

This issue isn't new, but because the symptoms can vary dramatically from machine to machine, and because reminders for one GP user can be completely different than reminders for another user, it isn't always obvious that reminders are causing the problem. And when this client's reminder was originally setup, it may have worked fine, but as they added thousands of contracts over the years, it would have gradually caused GP to become slower and slower.

I'm not a fan of the Reminder pop-up window that appears after login anyway, so after seeing how certain reminders can cripple GP, it is just another reason to avoid their general use.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

One strange behavior that he observed is that when he launched Dynamics GP 2010 directly on the SQL Server, it would take over 5 minutes to load. During that time, the GP window would be blank and have the famous "Not Responding" message in the title bar.

However, when GP was launched on a new Terminal Server, it "only" took about 100 seconds to load. Since we were troubleshooting other issues with the SQL Server, we thought that the server might be the problem. But after running SQL Profiler and tracing the Dynamics GP activity during the load process, we discovered that GP was running over 50,000 queries against the database before the application was usable. Once the queries stopped, GP seemed fine.

We traced the activity when launching GP on the Terminal Server, and the same 50,000 queries were being run, but for some reason it took less than 2 minutes for GP to fully launch on that server.

What originally seemed like a SQL Server issue now seemed to be a GP issue.

Reviewing the queries, we saw that they were against the service contract table, and GP was requesting every single record in that table, one record at a time. After scratching his head for a while, the client realized that the sa user had a reminder related to service contracts. Sure enough, after deleting that reminder, GP loaded fine, and loaded quickly.

This issue isn't new, but because the symptoms can vary dramatically from machine to machine, and because reminders for one GP user can be completely different than reminders for another user, it isn't always obvious that reminders are causing the problem. And when this client's reminder was originally setup, it may have worked fine, but as they added thousands of contracts over the years, it would have gradually caused GP to become slower and slower.

I'm not a fan of the Reminder pop-up window that appears after login anyway, so after seeing how certain reminders can cripple GP, it is just another reason to avoid their general use.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Thursday, September 29, 2011

How do you cope with the torrent of email?

Several years ago I was out of town at a conference and I didn't have any internet access during the day. This was before smart phones were in the hands of every teenager, and before 3G wireless internet cards or personal hotspots were common. And I was staying at a cheap hotel that at the time didn't provide internet access. (Gasp! Can you imagine!?!?)

Yes kids, it was the Stone Age.

Anyway, after my conference and dinner, I headed to the nearby Starbucks around 8:45pm to use their (then paid) WiFi service. I fired up my laptop, got connected to the internet, and then opened Outlook to deal with the day's e-mail.

Over 100 e-mail messages slammed into my Inbox, and then the Starbucks employee informed me they were closing in a few minutes. I sat in my rental car in the parking lot in front of Starbucks, with a weak WiFi signal, trying to respond to messages. After a few minutes, I realized it was futile. That was when I realized that there was a specific limit to the number of e-mails that I could handle in a day. It's an arbitrary number, but 100 a day is where things definitely start to fall apart.

If I were doing nothing else but handling my e-mail, I could easily handle the messages coming in every 5 minutes. And sure, many are probably the dreadful CC messages that infect every Inbox. But the ones that required my attention would still potentially take several minutes to respond to and fill my day.

So now let's head back to the real world where I actually have to get lots of real work done completely independent of e-mail, and where I am not paid solely to pounce on every e-mail message as soon as that little Outlook envelope icon appears in my system tray.

Today I was away from my computer at meetings for 3 hours and I received 30 e-mails during that period. My iPhone was vibrating constantly during my meetings and while I was driving. 30 messages may not sound like a lot, but I have so much actual work piled up with looming deadlines that even if I didn't touch my e-mail at all, my day (and evening!) would be completely full. Consider the 75 e-mail messages that I received today, and yesterday, and the day before, and I have a pile of e-mail on my hands. I've received over 1,400 messages so far this month, and although I know there are people who receive far more than that, managing all of those messages represent a major "time suck" for me.

So why am I writing a blog post instead of doing work? Valid question. I thought I would share a few of the practices that I have used over the last year to try and deal with the endless stream of e-mail that I receive. Collectively, these practices obviously aren't enough to fully resolve my dilemma, but every little bit helps.

1. Unsubscribe. I receive the usual marketing e-mail from dozens of companies, just like everyone else. For the companies I have used or liked, I used to tolerate their e-mails thinking that I might find something interesting. No longer. Unless I actively receive value from the marketing e-mails, I unsubscribe. If I don't have time to deal with all of my work or client e-mail, I don't need 10 different digests from Linked In groups that I never read.

2. No More Folders. I used to fastidiously organize my e-mail into folders by client and sometimes by project. I had dozens and dozens and dozens of folders. Naturally, I thought I was just being organized, just in case I needed to find that one message for that one project. I never realized how much time and mental energy I was expending on that useless task. Based on the recommendation of a friend, I got rid of all of them. Other than my Inbox, I now have one folder called "Read", which exists solely to get messages out of my Inbox. Unless I have to specifically reply or somehow follow up on that e-mail, I glance at it, and it then goes straight to the one and only Read folder to get it out of my Inbox. If I ever need to find an e-mail, I will either use the Outlook Search feature (not so great), or X1 Professional Client Desktop Search (excellent, but not perfect).

3. Quick Steps: Outlook 2010 has a feature called Quick Steps (a rebranded version of what is available in earlier versions of Outlook). It allows you to define a "macro" of sorts to perform organizational operations on your e-mail. I created a Quick Step called "Read" that will send a message to my single Read folder when I press CTRL+SHIFT+9. I got so sick of dragging messages to the folder using my mouse that I finally looked around and found that Quick Steps would let me do it with a keyboard shortcut. Now I don't even have to touch the mouse to file messages with a vengeance.

4. Categories: I started using Outlook Categories to try and help me prioritize my e-mail. Although Categories is a nice theoretical concept, it only works if you actually have time to deal with all of the e-mail that you have categorized. I can categorize my messages, but when the e-mails arrive faster than I can clear out the categorized messages, it's a futile exercise and the categories just grow and grow. I'll categorize 10 e-mails, but as I start doing actual work on one of the high priority items (or some other actual work), 10 more e-mails arrive, making my categorized list that much longer. I end up only being able to deal with high priority e-mails, and the "Follow Up" and "Personal" categories just grow indefinitely and collect dust.

5. Turn Off Notifications: After installing Outlook, the first thing I do is turn off the annoying Outlook desktop notification messages that appear every single time an e-mail arrives. What a horrible feature that is designed to distract people and keep them from doing any productive work. I then disable the sound that Outlook makes when a new message arrives. Now I only have the little envelope icon that appears in the system tray, and I'm considering disabling that.

6. Define "Email Time" and "Email Free Time": If I were to respond to emails as they arrive, I would be constantly interrupted. Even if I just have to glance at an e-mail and file it away, it takes may attention away from the proposal, the code, the estimate, the query, the report, or whatever billable task I'm working on. And if I start to reply to some messages, which is a bad habit I have, then before I know it, 5, 15, or 30 minutes slips by while my real work is left unattended. Now, when I have to focus on certain tasks, I now close Outlook entirely for several hours at a time. I also close my web browser, just to minimize the opportunities for distraction.

7. Smart Phone: When I got my first Blackberry, I thought that it would be handy to send or receive the occasional e-mail. It was definitely handy, but one thing it made me realize was how much waiting I did when I was out of the office. I realized that at a minimum, I could file away messages while in line for 2 minutes at Starbucks, or while waiting for my order at lunch, and especially while sitting at the airport. Even if you don't compose any e-mail or actively deal with a task related to your e-mail, you can at least file away the messages that don't need to be cluttering up your Inbox, saving some time when you return to your computer.

8. Spring Cleaning: After sever months of growth, my Inbox will be littered with old threads that I either took care of and forgot to file, or are now obsolete. Occasionally I'll take time to sort messages by sender, quickly scan them, and then file them en masse, clearing out my Inbox to a handful of messages. An empty Inbox is a great feeling. After it's done, I e-mail a few colleagues to brag about how few messages I have in my Inbox--and of course they then reply a dozen times to clutter it up again.

I know there are many tools available for managing e-mail, and even more tips and techniques and preferences, but going back to my original point, when the volume of incoming e-mail exceeds a certain threshold, and when I have lots of actual work to do, it eventually overwhelms me.

How do you cope?

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Yes kids, it was the Stone Age.

Anyway, after my conference and dinner, I headed to the nearby Starbucks around 8:45pm to use their (then paid) WiFi service. I fired up my laptop, got connected to the internet, and then opened Outlook to deal with the day's e-mail.

Over 100 e-mail messages slammed into my Inbox, and then the Starbucks employee informed me they were closing in a few minutes. I sat in my rental car in the parking lot in front of Starbucks, with a weak WiFi signal, trying to respond to messages. After a few minutes, I realized it was futile. That was when I realized that there was a specific limit to the number of e-mails that I could handle in a day. It's an arbitrary number, but 100 a day is where things definitely start to fall apart.

If I were doing nothing else but handling my e-mail, I could easily handle the messages coming in every 5 minutes. And sure, many are probably the dreadful CC messages that infect every Inbox. But the ones that required my attention would still potentially take several minutes to respond to and fill my day.

So now let's head back to the real world where I actually have to get lots of real work done completely independent of e-mail, and where I am not paid solely to pounce on every e-mail message as soon as that little Outlook envelope icon appears in my system tray.

Today I was away from my computer at meetings for 3 hours and I received 30 e-mails during that period. My iPhone was vibrating constantly during my meetings and while I was driving. 30 messages may not sound like a lot, but I have so much actual work piled up with looming deadlines that even if I didn't touch my e-mail at all, my day (and evening!) would be completely full. Consider the 75 e-mail messages that I received today, and yesterday, and the day before, and I have a pile of e-mail on my hands. I've received over 1,400 messages so far this month, and although I know there are people who receive far more than that, managing all of those messages represent a major "time suck" for me.

So why am I writing a blog post instead of doing work? Valid question. I thought I would share a few of the practices that I have used over the last year to try and deal with the endless stream of e-mail that I receive. Collectively, these practices obviously aren't enough to fully resolve my dilemma, but every little bit helps.

1. Unsubscribe. I receive the usual marketing e-mail from dozens of companies, just like everyone else. For the companies I have used or liked, I used to tolerate their e-mails thinking that I might find something interesting. No longer. Unless I actively receive value from the marketing e-mails, I unsubscribe. If I don't have time to deal with all of my work or client e-mail, I don't need 10 different digests from Linked In groups that I never read.

2. No More Folders. I used to fastidiously organize my e-mail into folders by client and sometimes by project. I had dozens and dozens and dozens of folders. Naturally, I thought I was just being organized, just in case I needed to find that one message for that one project. I never realized how much time and mental energy I was expending on that useless task. Based on the recommendation of a friend, I got rid of all of them. Other than my Inbox, I now have one folder called "Read", which exists solely to get messages out of my Inbox. Unless I have to specifically reply or somehow follow up on that e-mail, I glance at it, and it then goes straight to the one and only Read folder to get it out of my Inbox. If I ever need to find an e-mail, I will either use the Outlook Search feature (not so great), or X1 Professional Client Desktop Search (excellent, but not perfect).

3. Quick Steps: Outlook 2010 has a feature called Quick Steps (a rebranded version of what is available in earlier versions of Outlook). It allows you to define a "macro" of sorts to perform organizational operations on your e-mail. I created a Quick Step called "Read" that will send a message to my single Read folder when I press CTRL+SHIFT+9. I got so sick of dragging messages to the folder using my mouse that I finally looked around and found that Quick Steps would let me do it with a keyboard shortcut. Now I don't even have to touch the mouse to file messages with a vengeance.

4. Categories: I started using Outlook Categories to try and help me prioritize my e-mail. Although Categories is a nice theoretical concept, it only works if you actually have time to deal with all of the e-mail that you have categorized. I can categorize my messages, but when the e-mails arrive faster than I can clear out the categorized messages, it's a futile exercise and the categories just grow and grow. I'll categorize 10 e-mails, but as I start doing actual work on one of the high priority items (or some other actual work), 10 more e-mails arrive, making my categorized list that much longer. I end up only being able to deal with high priority e-mails, and the "Follow Up" and "Personal" categories just grow indefinitely and collect dust.

5. Turn Off Notifications: After installing Outlook, the first thing I do is turn off the annoying Outlook desktop notification messages that appear every single time an e-mail arrives. What a horrible feature that is designed to distract people and keep them from doing any productive work. I then disable the sound that Outlook makes when a new message arrives. Now I only have the little envelope icon that appears in the system tray, and I'm considering disabling that.

6. Define "Email Time" and "Email Free Time": If I were to respond to emails as they arrive, I would be constantly interrupted. Even if I just have to glance at an e-mail and file it away, it takes may attention away from the proposal, the code, the estimate, the query, the report, or whatever billable task I'm working on. And if I start to reply to some messages, which is a bad habit I have, then before I know it, 5, 15, or 30 minutes slips by while my real work is left unattended. Now, when I have to focus on certain tasks, I now close Outlook entirely for several hours at a time. I also close my web browser, just to minimize the opportunities for distraction.

7. Smart Phone: When I got my first Blackberry, I thought that it would be handy to send or receive the occasional e-mail. It was definitely handy, but one thing it made me realize was how much waiting I did when I was out of the office. I realized that at a minimum, I could file away messages while in line for 2 minutes at Starbucks, or while waiting for my order at lunch, and especially while sitting at the airport. Even if you don't compose any e-mail or actively deal with a task related to your e-mail, you can at least file away the messages that don't need to be cluttering up your Inbox, saving some time when you return to your computer.

8. Spring Cleaning: After sever months of growth, my Inbox will be littered with old threads that I either took care of and forgot to file, or are now obsolete. Occasionally I'll take time to sort messages by sender, quickly scan them, and then file them en masse, clearing out my Inbox to a handful of messages. An empty Inbox is a great feeling. After it's done, I e-mail a few colleagues to brag about how few messages I have in my Inbox--and of course they then reply a dozen times to clutter it up again.

I know there are many tools available for managing e-mail, and even more tips and techniques and preferences, but going back to my original point, when the volume of incoming e-mail exceeds a certain threshold, and when I have lots of actual work to do, it eventually overwhelms me.

How do you cope?

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Wednesday, September 28, 2011

Hidden Gem- Check Distribution Report

Do you need a report to show you the invoices that were paid by a particular check? How about viewing the distributions associated with the invoices that were paid by a particular check? These are fairly common requests, and often lead to a bit of head scratching for users. We look in SmartList, and there really isn't a great option (unless you have SmartList Builder-- but even then, you have to create it) since the relationship between a check and invoices can be one to many (one check can pay many invoices).

So when I get this question, and the client doesn't own SmartList Builder or they want a solution that is quick and easy, I point them to the Check Distribution Report.

I know that similar information can be displayed using SmartList Builder, but this report is a great option when you need a quick solution and/or you don't own SmartList Builder.

So when I get this question, and the client doesn't own SmartList Builder or they want a solution that is quick and easy, I point them to the Check Distribution Report.

Reports-Purchasing-Check Information-Check Distribution

Click New Option

When setting up the report option, you can restrict to a range of Vendor IDs, Dates, or Check Numbers. You can also select to Include Dist Types, to include only PURCH type distributions for example.

Check Distribution Report

The report displays each check followed by each invoice paid. The distributions appear directly below each invoice that is listed. This report can be quite handy when trying to track back checks to their associated invoices.

I know that similar information can be displayed using SmartList Builder, but this report is a great option when you need a quick solution and/or you don't own SmartList Builder.

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a supervising consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.

Adding Employer Tax Fields To Payroll Check Register

We had an issue pop up this month that was a bit puzzling on the surface. When a client printed the payroll Check Register when the payroll posted, they were getting different results than when they reprinted the same report using Reports-Payroll-Reprint Journals-Check Register.

On the standard Check Register and Reprint Check Register reports, the Employer FICA taxes are totaled in to one field called Employer FICA Owed. However, on the client's reports, this amount was also broken out in to two additional fields for Employer FICA/Social Security and Employer FICA/Medicare. Given that these fields are not standard on the report, we knew (and confirmed through Microsoft Dynamics GP-Tools-Customize-Customization Maintenance) that the reports were modified.

In looking at these modified versions of the reports, we noticed that all of the fields matched with the exception of the Employer FICA/Social Security and Employer FICA/Medicare fields that were added to the reports. But, why would the fields be different? If the same fields were added to the reports? Well, that is the big IF.

So, I opened each report in Report Writer, and here is what I found. There are a total of four fields that store Employer FICA information, two for Social Security and two for Medicare. When working with the Reprint Check Register report, the fields are located in the Payroll Check History table (for the Check Register report, the table is Payroll Work Header): Employer FICA/Med Tax On Tips, Employer FICA/Medicare Withholding, Employer FICA/SS Tax On Tips, and Employer FICA/Social Security Withholding.

In the client's case, one report was modified to pull only the "withholding" fields while the other report was modified with calculated fields to combine the "withholding" field with the corresponding "tax on tips" field (e.g., Employer FICA/Medicare Withholding + Employer FICA/Med Tax On Tips). And because the client had employees with tips, the amount displayed for each of the FICA fields was off by the FICA on tips.

So, lesson learned, if you want to add the Employer FICA/Medicare and Employer FICA/Social Security to the payroll check register reports, make sure you either display all four fields or create calculated fields to summarize them appropriately.

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a supervising consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.

On the standard Check Register and Reprint Check Register reports, the Employer FICA taxes are totaled in to one field called Employer FICA Owed. However, on the client's reports, this amount was also broken out in to two additional fields for Employer FICA/Social Security and Employer FICA/Medicare. Given that these fields are not standard on the report, we knew (and confirmed through Microsoft Dynamics GP-Tools-Customize-Customization Maintenance) that the reports were modified.

In looking at these modified versions of the reports, we noticed that all of the fields matched with the exception of the Employer FICA/Social Security and Employer FICA/Medicare fields that were added to the reports. But, why would the fields be different? If the same fields were added to the reports? Well, that is the big IF.

So, I opened each report in Report Writer, and here is what I found. There are a total of four fields that store Employer FICA information, two for Social Security and two for Medicare. When working with the Reprint Check Register report, the fields are located in the Payroll Check History table (for the Check Register report, the table is Payroll Work Header): Employer FICA/Med Tax On Tips, Employer FICA/Medicare Withholding, Employer FICA/SS Tax On Tips, and Employer FICA/Social Security Withholding.

In the client's case, one report was modified to pull only the "withholding" fields while the other report was modified with calculated fields to combine the "withholding" field with the corresponding "tax on tips" field (e.g., Employer FICA/Medicare Withholding + Employer FICA/Med Tax On Tips). And because the client had employees with tips, the amount displayed for each of the FICA fields was off by the FICA on tips.

So, lesson learned, if you want to add the Employer FICA/Medicare and Employer FICA/Social Security to the payroll check register reports, make sure you either display all four fields or create calculated fields to summarize them appropriately.

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a supervising consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.

Friday, September 23, 2011

Gigabyte Motherboard Reboots Multiple Times Before Loading Windows

This is completely unrelated to Dynamics GP, but since I just resolved this issue after HOURS of frustration, I wanted to share it in case some other unfortunate individual is experiencing the same issue.

I recently built two new PCs. One desktop with a Gigabyte GA-Z68X-UD3H-B3 motherboard, and one "server" with a Gigabyte GA-Z68A-D3H-B3 motherboard. Both have Intel Core i7-2600 processors.

As always, after getting everything installed, the first thing that I did after powering them on was update the BIOS. I then set the BIOS CMOS settings the way I liked, and then went to install Windows.

But when I tried to install Windows, I noticed something strange. When the system was powered on, it would display the POST screen, then display the AHCI controller screen and drive listing, and then reboot. It would repeat this process and keep rebooting after the AHCI screen. Sometimes it would just start to load Windows, and then reboot, causing Windows to detect a boot failure. Sometimes it would reboot 3 times, sometimes 8 times, sometimes 2 times. Rarely just once. It would even reboot from within the BIOS configuration windows.

Something definitely felt wrong, but I didn't know where to start. I tried removing some memory, unplugging and disabling any peripherals, changing CPU and memory voltage settings. Nothing seemed to make the systems boot properly.

I even ordered 24 GB of new RAM, thinking that maybe the memory I purchased wasn't incompatible. But I received the new memory yesterday, plugged it in, but the multiple reboots still persisted.

Fortunately a friend of mine was available, so he stopped by to help me troubleshoot. After trying everything we could think of, with no success, he suggested that we try the "Reset to factory defaults" option in the BIOS. The Gigabyte boards have a "Reset to Fail Safe Defaults" option, so we tried that. Magically, the system would reboot fine.

After that, I was able to use the "Optimized" default settings and adjust the other options, and the machines worked fine.

It seems that after a BIOS update, some invalid values or corruption may exist in some of the CMOS settings. Resetting to a default configuration appears to clear all of those values, reset everything, and provide you with a stable configuration. I've never, ever used that option previously, so it would have never occurred to me to try it, but I'm now a believer. He said that he's seen that recommendation previously from some other motherboard manufacturers, so that is how he was aware of it.

So, the bottom line is: After updating your BIOS, make sure to reset to default values, and then reconfigure your settings.

And now I'm off to fill out the RMA form for the extra RAM that I didn't need...

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

I recently built two new PCs. One desktop with a Gigabyte GA-Z68X-UD3H-B3 motherboard, and one "server" with a Gigabyte GA-Z68A-D3H-B3 motherboard. Both have Intel Core i7-2600 processors.

As always, after getting everything installed, the first thing that I did after powering them on was update the BIOS. I then set the BIOS CMOS settings the way I liked, and then went to install Windows.

But when I tried to install Windows, I noticed something strange. When the system was powered on, it would display the POST screen, then display the AHCI controller screen and drive listing, and then reboot. It would repeat this process and keep rebooting after the AHCI screen. Sometimes it would just start to load Windows, and then reboot, causing Windows to detect a boot failure. Sometimes it would reboot 3 times, sometimes 8 times, sometimes 2 times. Rarely just once. It would even reboot from within the BIOS configuration windows.

Something definitely felt wrong, but I didn't know where to start. I tried removing some memory, unplugging and disabling any peripherals, changing CPU and memory voltage settings. Nothing seemed to make the systems boot properly.

I even ordered 24 GB of new RAM, thinking that maybe the memory I purchased wasn't incompatible. But I received the new memory yesterday, plugged it in, but the multiple reboots still persisted.

Fortunately a friend of mine was available, so he stopped by to help me troubleshoot. After trying everything we could think of, with no success, he suggested that we try the "Reset to factory defaults" option in the BIOS. The Gigabyte boards have a "Reset to Fail Safe Defaults" option, so we tried that. Magically, the system would reboot fine.

After that, I was able to use the "Optimized" default settings and adjust the other options, and the machines worked fine.

It seems that after a BIOS update, some invalid values or corruption may exist in some of the CMOS settings. Resetting to a default configuration appears to clear all of those values, reset everything, and provide you with a stable configuration. I've never, ever used that option previously, so it would have never occurred to me to try it, but I'm now a believer. He said that he's seen that recommendation previously from some other motherboard manufacturers, so that is how he was aware of it.

So, the bottom line is: After updating your BIOS, make sure to reset to default values, and then reconfigure your settings.

And now I'm off to fill out the RMA form for the extra RAM that I didn't need...

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Thursday, September 22, 2011

eConnect 2010 Error: The transaction has aborted

I have a client for which I have developed over a dozen eConnect integrations. They have been running on GP 9 for several years, and since the client recently upgraded to GP 2010, I upgraded them all a few months ago.

Although the upgraded integrations work properly, one issue that we have observed is that several of them receive an error occasionally that simply says "The transaction has aborted."

After trying several tests and various troubleshooting at the client, we submitted a support case. Aaron at Microsoft called me promptly to review the details, and after searching through his notes and databases, found that this appears to be an issue with eConnect 2010 that was resolved in Service Pack 2.

The client currently has eConnect 2010 Service Pack 1 installed, so I'm going to install the Post-SP2 June 2011 Hotfix (KB 2561289), which will bring eConnect up to version 11.0.1812, to see if that resolves the issue.

I'll add an update next week as to whether this resolves the issue.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Although the upgraded integrations work properly, one issue that we have observed is that several of them receive an error occasionally that simply says "The transaction has aborted."

This error is occurring with multiple imports for different entities and transaction types. For example, the error occurs when importing an inventory item, an AR cash receipt, an AR payment application, and a SOP invoice.

The error occurs intermittently and inconsistently, and the error does not seem to be related to any specific entity or transaction. For example, when importing 2,577 inventory items, one item received the error. If the same file is re-imported, a different item will receive the transaction aborted error.

All of the integrations are submitting each transaction individually, and each of the integrations usually processes thousands of transactions at a time.

If you check your eConnect Event Log, you will see two different messages related to the transaction aborted error.

Example 1:

Action: Create Transaction

Current User Name:

Input parameters:

Exception type: Microsoft.Dynamics.GP.eConnect.eConnectException

Exception message: The transaction has aborted.

Stack Trace:

at Microsoft.Dynamics.GP.eConnect.ServiceProxy.CreateTransactionEntity(String connectionString, String xml)

at Microsoft.Dynamics.GP.eConnect.eConnectMethods.EntityImportImplementation(String connectionString, String sXML, Boolean isTransaction)

at Microsoft.Dynamics.GP.eConnect.eConnectMethods.ProcessEntityImport(String connectionString, String sXML, Boolean isTransaction)

at Microsoft.Dynamics.GP.eConnect.eConnectMethods.CreateTransactionEntity(String ConnectionString, String sXML)

Example 2:

Action: Service Create Transaction

Current User Name: DELIVERYAGENT\greatplains

Input parameters:

Exception type: System.Transactions.TransactionAbortedException

Exception message: The transaction has aborted.

Stack Trace:

at System.Transactions.TransactionStateAborted.EndCommit(InternalTransaction tx)

at System.Transactions.CommittableTransaction.Commit()

at System.Transactions.TransactionScope.InternalDispose()

at System.Transactions.TransactionScope.Dispose()

at Microsoft.Dynamics.GP.eConnect.eConnectMethods.EntityImportImplementation(String connectionString, String sXML, Boolean isTransaction)

at Microsoft.Dynamics.GP.eConnect.eConnectMethods.ProcessEntityImport(String connectionString, String sXML, Boolean isTransaction)

at Microsoft.Dynamics.GP.eConnect.eConnectMethods.CreateTransactionEntity(String ConnectionString, String sXML)

at Microsoft.Dynamics.GP.eConnect.Service.CreateTransactionEntity(String ConnectionString, String sXML)

Exception type:

System.InvalidOperationException

Exception message:

The requested operation cannot be completed because the connection has been broken.

After trying several tests and various troubleshooting at the client, we submitted a support case. Aaron at Microsoft called me promptly to review the details, and after searching through his notes and databases, found that this appears to be an issue with eConnect 2010 that was resolved in Service Pack 2.

The client currently has eConnect 2010 Service Pack 1 installed, so I'm going to install the Post-SP2 June 2011 Hotfix (KB 2561289), which will bring eConnect up to version 11.0.1812, to see if that resolves the issue.

I'll add an update next week as to whether this resolves the issue.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

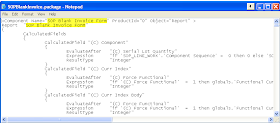

How to Serialize eConnect XML - Properly

I'm posting this for my own reference because I can never remember the full syntax and I regularly have to look it up.

I think I've seen at least 3 or 4 different code samples showing how to serialize eConnect XML in .NET.

One version, which I think is actually based on sample eConnect code that Microsoft provided years ago, writes the XML out to a file on the hard drive, then reads it back into memory. The first time I saw this my eyes rolled in disbelief. Clearly that is a terrible idea and is completely unnecessary.

The other versions, which I have used over the last few years, used in-memory techniques to serialize the XML using a combinations of a MemoryStream, XmlTextReader, and StringBuilder. Although these worked fine, the process to use an XmlTextReader and StringBuilder never seemed ideal.

The best example I've seen so far is the one that Brian Prince posted on the MBS Guru blog back in December 2010. The XML Document approach is simple and very clean, and it is the technique I've been using ever since he wrote about it.

Here is Brian's approach:

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

I think I've seen at least 3 or 4 different code samples showing how to serialize eConnect XML in .NET.

One version, which I think is actually based on sample eConnect code that Microsoft provided years ago, writes the XML out to a file on the hard drive, then reads it back into memory. The first time I saw this my eyes rolled in disbelief. Clearly that is a terrible idea and is completely unnecessary.

The other versions, which I have used over the last few years, used in-memory techniques to serialize the XML using a combinations of a MemoryStream, XmlTextReader, and StringBuilder. Although these worked fine, the process to use an XmlTextReader and StringBuilder never seemed ideal.

The best example I've seen so far is the one that Brian Prince posted on the MBS Guru blog back in December 2010. The XML Document approach is simple and very clean, and it is the technique I've been using ever since he wrote about it.

Here is Brian's approach:

eConnectType eConnect = new eConnectType();

MemoryStream memoryStream = new MemoryStream();

XmlSerializer xmlSerializer = new XmlSerializer(eConnect.GetType());

xmlSerializer.Serialize(memoryStream, eConnect);

memoryStream.Position = 0;

XmlDocument xmlDocument = new XmlDocument();

xmlDocument.Load(memoryStream);

memoryStream.Close();

econnectMethods.eConnect_EntryPoint(

connectionString, EnumTypes.ConnectionStringType.SqlClient,

xmlDocument.OuterXml, EnumTypes.SchemaValidationType.None,

string.Empty);

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Tuesday, September 20, 2011

Little Tricks With Report Writer Package Files

I remember when I figured out that I could open, in Notepad, a package file (Microsoft Dynamics GP-Tools-Customize-Customization Maintenance-Export) containing a report. I thought, hey, that's cool. And then I figured out that I could copy the "guts" of a report from one package to another in order to do something like....make the SOP Other Invoice Form look exactly like the SOP Blank Invoice Form. With that, I thought, hey, that is really cool! So, I thought I had it all figured out-- easy enough to do as long as the tables of the reports were pretty much the same.

But, then, my coworker Cindy Boersma asked if I knew I could make the SOP Blank History Invoice Form look exactly like the SOP Blank Invoice Form using the same method. Holy cow! No, I did not, although it all makes logical sense. Since all the information, including table names, is in the package file. So, here are the basic steps, adapted from Cindy's notes. As always, please make sure you either keep backups of the package files or the reports dictionary before attempting any of these steps to avoid the loss of your modifications :)

1. Export the report you want to use as the "basis" of the other report (Microsoft Dynamics GP-Tools-Customize-Customization Maintenance-Export)

2. Open the package file created using Notepad

3. At the top of the package file, you should see the component and report name as highlighted in the screenshot below

4. Simply edit these two (2) references to the report name to match the report that you wish to copy the package to (for example, SOP Blank History Invoice Form"). Check Report Writer if you have issues finding the report name (it needs to be the name used in Report Writer for the report, which is also the name that appears across on the top border of the Screen Output window when you print a report to the screen-- NOT the name that prints on the report itself, this is not always the report writer name).

5. Next, if the report you are copying to uses different tables (e.g., the SOP Blank History Invoice Form uses the SOP history tables while the SOP Blank Invoice Form uses the SOP work tables), you will need to use Edit-Replace in Notepad to make the substitutions. Now, in this case, it assumes that the field names in the tables are the same. So we find/replace SOP_LINE_WORK with SOP_LINE_HIST and SOP_HDR_WORK with SOP_HDR_HIST. And here are the results...

Not only did we replace the component and report identifiers, but the find/replace also updated the table references in the report package as well.

6. Now, we can import our package file back in to GP, Microsoft Dynamics GP-Tools-Customize-Customization Maintenance-Import. Remember, no other users can be in Dynamics GP when you complete this process (assuming you are accessing a shared reports dictionary).

And there you go. Creating a historical invoice that looks identical to the standard invoice in minutes! So cool. And why I love this job-- learn something new (even if it was sooo obvious) every day!

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a supervising consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.

Monday, September 19, 2011

Using Custom Icons to Differentate GP Versions

I develop Dynamics GP customizations and integrations for alot of different customers, and currently they are running GP 9, GP 10, GP 2010, and GP 2010 R2 (thankfully nothing older than GP 9!). Most use only the core GP modules, while some use Project Accounting, and a few use Analytical Accounting. Because of this, I have to maintain several different versions of GP on multiple virtual servers.

This is a pretty standard situation, but one small annoyance I have had is that the GP 10 icon is the same as the GP 2010 icon. So if I have both versions installed on a server, I have to try and differentiate the icons on my start menu, task bar, and desktop so that I can tell which is GP 10 versus 2010. And then I have one machine with GP 2010 and a separate install of GP 2010 R2, so that is also fun.

I don't know why it didn't occur to me earlier, but tonight I thought I would try and create custom icons for each version of GP. If you are using Visual Studio 2008 or Visual Studio 2010, it's a pretty simple process.

I already have a standard Dynamics GP icon file that I created several years ago, or if you want to create your own, you can use an icon editor or extractor.

I just made a copy of the icon file, opened it in Visual Studio, and then added a basic "2010 R2" to the 32x32 icon, and just an "R2" to the 24x24 and 16x16 icons. I've made a similar one for GP 10. And now I can use the same technique to create one for my separate Analytical Accounting, Project Accounting, and Manufacturing installs.

Obviously desktop icons have a text label, but the custom icons are very helpful for the icons in the system tray.

Unfortunately, while writing this, I just discovered one very big limitation. The Visual Studio 2010 icon editor does not support editing of 32-bit (color depth) icons. Since I am using my custom icons on a virtual server that I access via RDP, the 16-bit icons are fine for me. But if you want to make 32-bit versions, you'll need a real icon editor, such as the very good Axialis Icon Workshop, or perhaps there is a free product available.

It appears that Axialis Icon Workshop includes a Visual Studio 2010 Add In, so that's a nice feature if you do much icon editing.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

This is a pretty standard situation, but one small annoyance I have had is that the GP 10 icon is the same as the GP 2010 icon. So if I have both versions installed on a server, I have to try and differentiate the icons on my start menu, task bar, and desktop so that I can tell which is GP 10 versus 2010. And then I have one machine with GP 2010 and a separate install of GP 2010 R2, so that is also fun.

I don't know why it didn't occur to me earlier, but tonight I thought I would try and create custom icons for each version of GP. If you are using Visual Studio 2008 or Visual Studio 2010, it's a pretty simple process.

I already have a standard Dynamics GP icon file that I created several years ago, or if you want to create your own, you can use an icon editor or extractor.

I just made a copy of the icon file, opened it in Visual Studio, and then added a basic "2010 R2" to the 32x32 icon, and just an "R2" to the 24x24 and 16x16 icons. I've made a similar one for GP 10. And now I can use the same technique to create one for my separate Analytical Accounting, Project Accounting, and Manufacturing installs.

Obviously desktop icons have a text label, but the custom icons are very helpful for the icons in the system tray.

Unfortunately, while writing this, I just discovered one very big limitation. The Visual Studio 2010 icon editor does not support editing of 32-bit (color depth) icons. Since I am using my custom icons on a virtual server that I access via RDP, the 16-bit icons are fine for me. But if you want to make 32-bit versions, you'll need a real icon editor, such as the very good Axialis Icon Workshop, or perhaps there is a free product available.

It appears that Axialis Icon Workshop includes a Visual Studio 2010 Add In, so that's a nice feature if you do much icon editing.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Friday, September 9, 2011

Credit Limits and Sales Process Holds

I just finished up a post for the BKD blog on sales process holds, and I thought I might spend a little time here on one the neat side benefits of using process holds. For those of you that are not familiar with them, sales process holds are user-definable holds that you can assign to Sales Order Processing transactions (Quotes, Orders, Invoices, etc) that can prevent the document from being printed, posted, fulfilled, and/or transferred. These holds are quite handy, as they can be applied to ranges of documents, individual documents, or even default when certain document types are used.

There is a bit of a "bonus" to using sales process holds in tandem with customer credit limits. You can actually specify a process hold to be automatically assigned to a document when the customer exceeds their credit limit. For example, let's say that your credit manager has to review all documents that exceed a customer's credit limit. By assigning the process hold automatically, the credit manager could complete his or her review and remove the holds on those documents that can continue to be processed.

The setup for this feature is quite simple. Start by setting up the process hold, Microsoft Dynamics GP-Tools-Setup-Sales-Process Holds.

There is a bit of a "bonus" to using sales process holds in tandem with customer credit limits. You can actually specify a process hold to be automatically assigned to a document when the customer exceeds their credit limit. For example, let's say that your credit manager has to review all documents that exceed a customer's credit limit. By assigning the process hold automatically, the credit manager could complete his or her review and remove the holds on those documents that can continue to be processed.

The setup for this feature is quite simple. Start by setting up the process hold, Microsoft Dynamics GP-Tools-Setup-Sales-Process Holds.

Enter the Process Hold ID and Description. Specify a Password if you want users to enter a password in order to remove the hold. And then mark the items you want to "Apply Hold To". Click Save.

Next, you need to specify the hold on the specify invoice and order types that should automatically have a credit limit hold applied. Go to Microsoft Dynamics GP-Tools-Setup-Sales-Sales Order Processing Setup. Click the Sales Document Setup button and choose either Invoice or Order.

Select the Order or Invoice ID, and then select the appropriate process hold in the Credit Limit Hold ID field. Click Save. Now, when you enter an order using this Order ID and the customer exceeds their credit limit, the process hold specified will be automatically assigned to the order. In order for this functionality to work, you CANNOT have a password specified for the "Exceed Credit Limit" option in Receivables Management Setup (Microsoft Dynamics GP-Tools-Setup-Sales-Receivables).

When entering an order that exceeds the customer's credit limit, a warning will be displayed. Choose Continue, and the hold will automatically be applied to the order when it is saved.

Note that the CREDIT process hold has automatically been applied. How easy is that (to borrow from the fabulous Ina Garten)?

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a supervising consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.

Wednesday, September 7, 2011

Mysterious eConnect Error: The stored procedure does not exist. Watch your schemas!

I recently upgraded a Dynamics GP Visual Studio Tools AddIn from GP 9 from GP 2010. The AddIn reviews some GP transactions, performs some calculations, and then inserts new payroll transactions using eConnect.

The upgrade was straightforward and worked fine on my development server. It installed smoothly on the client server and looked like it was working...right up to the point where the following eConnect error occurred:

In this particular case, the AddIn was inserting an entire payroll batch, but the specific stored procedure in the error could have been any eConnect procedure.

I've never seen that type of error before, so I started with the obvious--I checked the SQL database to see if the procedure existed. It definitely did exist in the database.

I then thought that maybe it was a permission issue, so I confirmed that the eConnect domain user was setup as a SQL Login, and that an eConnect user was properly setup for the company database. I even checked the DYNGRP role to make sure that the stored procedure was listed with EXEC permissions.

Everything looked fine.

I did a little research on the error, and saw a post that recommended checking the database connection string. If the database was not specified properly, and the import was somehow referencing the master database or even DYNAMICS, naturally that would cause this type of error. So I added some debugging code, but the connection string looked fine--the proper GP company database was being referenced.

I was running out of options, so I dug out the Direct Document Sender, a tool used by GP support to troubleshoot eConnect issues. It allows you to specify the database connection info, select an XML file containing the eConnect data to be imported, and then it sends the XML document off to eConnect. This helps to rule out any bugs in your custom integration.

Interestingly, the Direct Document Sender received the same error message indicating that the stored procedure did not exist. Although it helped to rule out the VS Tools AddIn and its eConnect code, and it helped me realize that there was a lower level issue with the client's environment, I still didn't have any specific clues as to the real cause. I was thinking that maybe it was a problem with the eConnect procedures in the client's databases, or that perhaps something did not upgrade properly from GP 9 to GP 2010.

As a long shot, I ran the dbmaintenance.exe utility in the Dynamics GP application directory to recreate the Functions and Stored Procedures on the company database. That completed successfully, but when I ran the integration and the Direct Document Sender, both still received the same error.

As a final test, I wanted to get a baseline to test against in the client's environment, so I created the TWO / Fabrikam test company database. GP Utilities setup the company, and I then went into SQL Server Management Studio and added the eConnect login as a user on the TWO database.

To perform my test with the Direct Document Sender (which uses Windows authentication to access the SQL Server), I logged into the client's server as the eConnect domain user, just to make sure that no other user account variables were involved. I launched the Sender and started to setup the connection string, but when I went to select the database, TWO was not listed. I verified everything several more times, but TWO didn't show up.

I then logged out of the server and logged back in as the Administrator. When I did that, the TWO database did show up. Puzzled, I checked and rechecked the eConnect user for the TWO database, but it looked fine. For some reason I couldn't get TWO to appear in the database list.

Since this didn't make any sense, I went back to the SQL Server Login window for the eConnect user and checked the settings for at least the fifth time. While I was staring at the window, something caught my attention.

I scrolled to the right and saw that the user record for the TWO database had a Default Schema of dbo, but the records for the company databases had the eConnect user listed as the Default Schema.

The light bulb instantly came on and it all made sense. Well almost all.

If you aren't familiar with SQL Server Schemas, you should definitely read about them just enough to understand what they are. They are not used by GP, so you don't need to be an expert; however, because they are not used by GP, if someone happens to use them in a GP database, it will likely cause problems, so you will need to know just enough to identify, understand, and resolve the issue.

Because the eConnect SQL user was assigned a Default Schema other than dbo, when eConnect logged into the database and tried to execute the payroll stored procedure, it literally didn't exist. In fact nothing existed--no tables, no stored procedures, nothing.

I tried changing the Default Schema for the user back to dbo, but for some reason that didn't solve the problem. I had to delete the eConnect user and eConnect schema completely from all databases, and delete the eConnect login for good measure, and then recreate the login and users with the proper dbo default schema.

Once I did that, the TWO database showed up in Direct Document Sender, and sure enough, eConnect worked just fine. The AddIn immediately started working properly and was able to import payroll batches.

I'm not entirely sure how the eConnect schema got created on the SQL Server or how it was assigned as the Default Schema for the eConnect user on the company databases. And I don't understand why changing the Default Schema back to dbo didn't fix the problem. But in the end, it was easy enough to remove the users completely and recreate them.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

The upgrade was straightforward and worked fine on my development server. It installed smoothly on the client server and looked like it was working...right up to the point where the following eConnect error occurred:

The stored procedure 'taCreatePayrollBatchHeaderInsert' doesn't exist.

In this particular case, the AddIn was inserting an entire payroll batch, but the specific stored procedure in the error could have been any eConnect procedure.

I've never seen that type of error before, so I started with the obvious--I checked the SQL database to see if the procedure existed. It definitely did exist in the database.

I then thought that maybe it was a permission issue, so I confirmed that the eConnect domain user was setup as a SQL Login, and that an eConnect user was properly setup for the company database. I even checked the DYNGRP role to make sure that the stored procedure was listed with EXEC permissions.

Everything looked fine.

I did a little research on the error, and saw a post that recommended checking the database connection string. If the database was not specified properly, and the import was somehow referencing the master database or even DYNAMICS, naturally that would cause this type of error. So I added some debugging code, but the connection string looked fine--the proper GP company database was being referenced.

I was running out of options, so I dug out the Direct Document Sender, a tool used by GP support to troubleshoot eConnect issues. It allows you to specify the database connection info, select an XML file containing the eConnect data to be imported, and then it sends the XML document off to eConnect. This helps to rule out any bugs in your custom integration.

Interestingly, the Direct Document Sender received the same error message indicating that the stored procedure did not exist. Although it helped to rule out the VS Tools AddIn and its eConnect code, and it helped me realize that there was a lower level issue with the client's environment, I still didn't have any specific clues as to the real cause. I was thinking that maybe it was a problem with the eConnect procedures in the client's databases, or that perhaps something did not upgrade properly from GP 9 to GP 2010.

As a long shot, I ran the dbmaintenance.exe utility in the Dynamics GP application directory to recreate the Functions and Stored Procedures on the company database. That completed successfully, but when I ran the integration and the Direct Document Sender, both still received the same error.

As a final test, I wanted to get a baseline to test against in the client's environment, so I created the TWO / Fabrikam test company database. GP Utilities setup the company, and I then went into SQL Server Management Studio and added the eConnect login as a user on the TWO database.

To perform my test with the Direct Document Sender (which uses Windows authentication to access the SQL Server), I logged into the client's server as the eConnect domain user, just to make sure that no other user account variables were involved. I launched the Sender and started to setup the connection string, but when I went to select the database, TWO was not listed. I verified everything several more times, but TWO didn't show up.

I then logged out of the server and logged back in as the Administrator. When I did that, the TWO database did show up. Puzzled, I checked and rechecked the eConnect user for the TWO database, but it looked fine. For some reason I couldn't get TWO to appear in the database list.

Since this didn't make any sense, I went back to the SQL Server Login window for the eConnect user and checked the settings for at least the fifth time. While I was staring at the window, something caught my attention.

I scrolled to the right and saw that the user record for the TWO database had a Default Schema of dbo, but the records for the company databases had the eConnect user listed as the Default Schema.

The light bulb instantly came on and it all made sense. Well almost all.

If you aren't familiar with SQL Server Schemas, you should definitely read about them just enough to understand what they are. They are not used by GP, so you don't need to be an expert; however, because they are not used by GP, if someone happens to use them in a GP database, it will likely cause problems, so you will need to know just enough to identify, understand, and resolve the issue.

Because the eConnect SQL user was assigned a Default Schema other than dbo, when eConnect logged into the database and tried to execute the payroll stored procedure, it literally didn't exist. In fact nothing existed--no tables, no stored procedures, nothing.

I tried changing the Default Schema for the user back to dbo, but for some reason that didn't solve the problem. I had to delete the eConnect user and eConnect schema completely from all databases, and delete the eConnect login for good measure, and then recreate the login and users with the proper dbo default schema.

Once I did that, the TWO database showed up in Direct Document Sender, and sure enough, eConnect worked just fine. The AddIn immediately started working properly and was able to import payroll batches.

I'm not entirely sure how the eConnect schema got created on the SQL Server or how it was assigned as the Default Schema for the eConnect user on the company databases. And I don't understand why changing the Default Schema back to dbo didn't fix the problem. But in the end, it was easy enough to remove the users completely and recreate them.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Friday, September 2, 2011

eConnect 2010: CreateEntity vs. CreateTransactionEntity

If you develop eConnect integrations, you probably know by now that with eConnect 2010, Microsoft changed the method used to submit transactions to eConnect.

With GP 10 and earlier, there was a single method called "eConnect_EntryPoint". Simple and easy, it accepted all of the eConnect document types.

With GP 2010, there are now two different methods. There is now a method called "CreateEntity", and a second called "CreateTransactionEntity".

Although I have one guess, I haven't asked and don't really know why this change was made, or what the rationale was behind implementing two methods--I just hope that some thought was put into it, and I hope that there was some benefit behind the scenes.

I hope that because it's slightly annoying to have to make two different calls now. By no means a big deal, but just slightly annoying, especially if you are upgrading from eConnect 9 or 10. You have to review your code and make sure that your code to create customers calls CreateEntity, and your code that imports invoices calls CreateTransactionEntity.

The eConnect 2010 Programmer's Guide provides this mediocre and slightly vague explanation the two new methods:

So, this is fine for obvious record types like a vendor vs. a voucher, but what if I create a new batch using SMTransactionBatchType? Is a batch an "Entity", or a "TransactionEntity"? That starts to become an existential question, because the current eConnect documentation offers no guidance when discussing each of the transaction types. You're stuck trying both methods to figure out which one to use (hint--the one that causes your app to crash is the wrong one).

With the griping aside, there is one last thing to pay attention to. Okay, it's really one last gripe.

Unless you paid very careful attention, you probably missed one critical difference between CreateEntity and CreateTransactionEntity. Do you know what it is?

They have different names obviously, but they both accept the same parameters, and they both seem to function in the same way. Read the last sentence of each of the paragraphs from the help file quoted above.

"If the operation succeeds, the method returns True as a boolean value."

"If the operation succeeds, the method returns an XML string that represents the eConnect XML document that was created."

WHY????

Despite having done at least a dozen eConnect 2010 integrations, I apparently hadn't paid close enough attention to that, so I discovered this the hard way: By having to submit a support case. I had upgraded an integration from GP 10, and when I had to create my two eConnect methods, I created a CreateEntity method, then copied that to do a CreateTransactionEntity method. I just changed the eConnect method name and that was it. Except that it wasn't.

I forgot to change my return variable from a bool to a string. When you make that mistake, you will get this error:

After inserting the transaction, eConnect returns the XML string, but the code fails when it tries to send that into your boolean return variable.

Strangely, the .NET compiler didn't catch the mistake, so I thank Chris Roehrich at GP support for pointing out my mistake.

UPDATE: I just figured out why the .NET compiler didn't catch the problem. This particular eConnect upgrade was the first time I had upgraded an old VB eConnect integration to GP 2010. It seems that the VB compiler does not detect the type mismatch. I just tried the same thing in C#, and the compiler picks it up right away. All of my previous eConnect 2010 integrations and upgrades have been in C#, which is why I never had the issue before.

Why they couldn't just make both methods have the same return type...well, again, I hope there was a some thought put into it and there is a reason and a benefit...

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

With GP 10 and earlier, there was a single method called "eConnect_EntryPoint". Simple and easy, it accepted all of the eConnect document types.

With GP 2010, there are now two different methods. There is now a method called "CreateEntity", and a second called "CreateTransactionEntity".

Although I have one guess, I haven't asked and don't really know why this change was made, or what the rationale was behind implementing two methods--I just hope that some thought was put into it, and I hope that there was some benefit behind the scenes.

I hope that because it's slightly annoying to have to make two different calls now. By no means a big deal, but just slightly annoying, especially if you are upgrading from eConnect 9 or 10. You have to review your code and make sure that your code to create customers calls CreateEntity, and your code that imports invoices calls CreateTransactionEntity.

The eConnect 2010 Programmer's Guide provides this mediocre and slightly vague explanation the two new methods:

CreateEntity: Creates a record using information from an eConnect XML document. Use CreateEntity to add data entities like customers or vendors. You specify the type of record to create with a parameter that represents an eConnect XML document. If the operation succeeds, the method returns True as a boolean value.

CreateTransactionEntity: Create a transaction using information from an eConnect XML document. Use CreateTransactionEntity for transaction documents like sales orders or inventory transactions. You specify the type of transaction to create with a parameter that represents an eConnect XML document. If the operation succeeds, the method returns an XML string that represents the eConnect XML document that was created.

So, this is fine for obvious record types like a vendor vs. a voucher, but what if I create a new batch using SMTransactionBatchType? Is a batch an "Entity", or a "TransactionEntity"? That starts to become an existential question, because the current eConnect documentation offers no guidance when discussing each of the transaction types. You're stuck trying both methods to figure out which one to use (hint--the one that causes your app to crash is the wrong one).

With the griping aside, there is one last thing to pay attention to. Okay, it's really one last gripe.

Unless you paid very careful attention, you probably missed one critical difference between CreateEntity and CreateTransactionEntity. Do you know what it is?

They have different names obviously, but they both accept the same parameters, and they both seem to function in the same way. Read the last sentence of each of the paragraphs from the help file quoted above.

"If the operation succeeds, the method returns True as a boolean value."

"If the operation succeeds, the method returns an XML string that represents the eConnect XML document that was created."

WHY????