It is a universal truth that we people are complainers. We complain about missing functionality here, broken functionality there, and it seems like we are never happy with the tools we have. Call it perfectionism, call it realism; I am as guilty of it as the next person.

But this week I was reminded of the usefulness of Modifier, a tool that is often lumped in with Report Writer as having limited usefulness and causing frustration. I think, as is often the case, the devil is in the details. Proper scoping at the start can ensure the right development tool is selected for the project. And for this project, the use of Modifier decreased the cost and maintenance of what had previously been a Dexterity-based customization.

When I was initially given the Dexterity customization to review, I noticed how basic it was. Literally 6 modified windows, all based on existing GP windows. No reports. No procedures. Nothing else. And no source code, meaning that whatever road we took would most likely involve recreating the customization. In looking at the modified windows, I found that the modifications were all cosmetic: changing the displayed size of fields (not the field itself) and rearranging headers. I am sure the developer bloggers out there can add to my impression, but it seems to me that someone knew Dexterity, so that is what they reached for as a tool. Not the worst reason to pick a customization tool, but definitely not the only factor to consider.

So, based on the fact that the modifications were cosmetic, Modifier was my tool of choice. It is easy to use, and I modified the 6 windows quickly. No concerns about source code, additional dictionaries to install, etc. No Dexterity (not that Dex is bad, but it does mean involving a developer each time). And when it comes to upgrades, the process is fairly simple. And if a window changes and upgrading the modification is not possible, it is easy enough to recreate now that we have documentation of the customization.

I recognize that Modifier may not be the best choice when getting into heavy customization, but for cosmetic adjustments it works just great. And with Business Ready Licensing, clients don't even have to own Modifier to run the modifications you create. They just need the Customization Site License, which is part of BRL.

So the lesson here, I think, is to pick the right tool for the job and save the client money while saving time now and in the future. I know I have cited this white paper before, but Choosing A Development Tool is a worthwhile read for both consultants and customers who want to take ownership of their development projects.

My blog has moved! Please visit the new blog at: https://blog.steveendow.com/ I will no longer be posting to Dynamics GP Land, and all new posts will be at https://blog.steveendow.com Thanks!

Friday, October 29, 2010

Thursday, October 28, 2010

What Implementation Methodology? I Think Mine Might Be Busted.

Christina: Steve and I don't do a lot of collaborative posts; it just takes a bit too much coordination :) But here is one that came out of many discussions we have had over the years. We all have war stories, things that have gone bad fast. Sometimes it's our fault, sometimes it's not. But statistics support that MOST of the time it is the system's (methodology’s) fault. A broken methodology will repeatedly put even the best consultants in frustrating situations. Here are just a handful of examples where Sure Step, or any formal implementation methodology, could avoid frustration and disappointment.

Steve: A few times I've received a call from a partner asking for assistance--but with a twist. It starts out with a seemingly normal request for assistance with a Dynamics GP integration or customization. Then I'm asked if I can work on the project "right away". And then I'm asked if I can discount my billing rate.

That's when the alarm bells go off. On subsequent calls, I start to learn the project has some serious issues, and I'm being brought in at the last minute to try and salvage an integration or customization that someone didn't complete or is broken.

Based on two of these experiences, I thought I would give Christina, a SureStep Guru, a challenge. I'll document some observations of a few projects-in-crisis that I've worked on, and she will map the symptoms to elements of the Sure Step methodology that might have helped avoid the breakdowns.

Scenario 1: "Steve, can you help us with this integration ASAP? We have some code that a developer at an offshore firm wrote, but it doesn't seem to work and they haven't returned any calls or e-mails in 3 weeks, when the development firm said they were transitioning to a new developer. Oh, and can you do us a favor and drop your rates 40%? We're already way over budget, are weeks behind schedule, our go live is in two weeks, and the client is very upset that the integration doesn't work and can't be tested. We're relying on you to help us out."

True story! With friends like these, who needs enemies?

So Christina, how could Sure Step have helped this partner and customer? (and me!)

Solution 1: First, if we were to open Sure Step, and look under Project Types and Implementation Phases for an Enterprise Project Type, we find activities that could address the scenario above. Selecting an activity will display guidance, templates, and other resources in the right hand pane.

Under 1.1.2, we see the project management discipline of Risk Management. Active risk management should have identified the potential risks of using offshore resources. And while we are talking about PM disciplines, how about Communications Management (1.1.3)? It would include Project Performance and Status Reporting, which should have identified potential budget issues before they actually occurred.

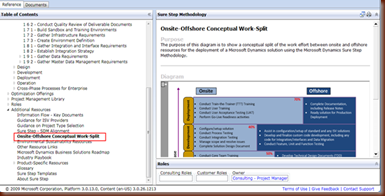

Now, if we skip down further in Sure Step, under Additional Resources, we find guidance on an Onsite/Offshore Conceptual Work Split. Was this discussed with the offshore developers? Were standards discussed and then managed proactively following the disciplines we discussed above?

Now, back under Project Types and Implementation Phases, in the Design Phase, there are also a number of activities related to customization design.

Where is the functional design in the scenario above? Was it signed off on by the customer? Did the developer create a technical design? Where is it? How about testing? Who did it and when? All of these set expectations of what the design includes, and make it easier to transition work when a resource is no longer available. These activities may be the responsibility of the developer and other consultants, but with active project management we can easily identify when these tasks don’t happen and manage the outcome.

Scenario 2: "Hey Steve, I hope you are available right away, because we really need your help. We were working with a third party company to help our client perform a multi-year detailed historical data conversion from their legacy accounting system to Dynamics GP, including project accounting transactions that will be migrated to Analytical Accounting transactions in GP. The vendor was supposed to deliver all of the converted data last Friday, but they called us this weekend to say that they can't read the source data and won't be able to deliver the GP data. We're hoping you can help us figure out how to extract the data from their old system and then import it into GP in the next 4 days since we're behind schedule. The client is not happy and we have already reduced our rates, so can you reduce your rate? Oh, and I had an expectation that this could all be done in less than 10 hours, because the client won't be happy having to pay much more for this conversion since we're already far over budget--will 10 hours be enough?"

Seriously, I can't make this stuff up. I've always wondered how people can keep a straight face while saying both "We really really need you to rescue us from a disaster" and "Can you dramatically lower your billing rate because we're over budget" in the same breath.

Christina, the stage is yours...

Solution 2: Well, this seems like a project with no analysis phase, no design phase, no development phase, just a deployment and operation phase. Sure Step prescribes 5 phases to an implementation project (the first phase, Diagnostic, is a pre-sales phase), and all projects do have all phases (although the length of each phase can vary based on the project).

Activities in Sure Step are laid out by phase, as shown in the screenshot below. There are also a number of cross-phase processes, which are activities (like Quality and Testing) that span multiple phases. By following this structure, we can ensure that tasks are completed in a logical order and that activities do not occur without the proper planning.

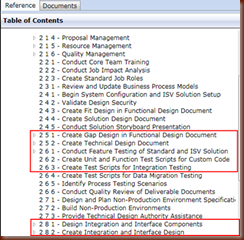

The Analysis phase is the information-gathering phase, and would include tasks like gathering data migration requirements and developing testing standards. The Design phase then includes activities for the functional and technical design of data migration, including the tasks outlined in the screenshot below.

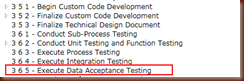

By completing Analysis and Design BEFORE actual development is needed, we can avoid surprises regarding how to extract the data and the required format for import into the system. Sure Step uses this measured approach specifically to discourage skipping right to development and deployment. And in the case of the scenario above, it seems that development was combined with deployment, leaving out critical Development phase tasks like data acceptance testing.

Scenario 3: "Steve, I need you to modify these 6 Dynamics GP and ISV windows to selectively disable certain buttons and certain fields for certain users. I don't want certain users accidentally changing or deleting data on these windows, so let's disable the Delete and Save buttons, and then make certain fields read only just in case. Hmmm, can you password protect a few fields as well? And Dynamics GP doesn't work the same way as their 20 year old custom AS400 system that is being replaced, so we need you to create a few custom windows to replicate their AS400 screens, and then modify the transaction workflow to match their old system. After you are done with that, we can talk about how we can get the Dynamics GP reports to look exactly like the AS400 reports they are currently using. I know that none of these changes are included in the statement of work and that we had 10 people participating in 2 weeks of system design meetings agree that we wouldn't need any customizations or modifications, but I think we really need Dynamics GP to work just like the AS400 system. Let's call these change request number 97 and 98 for now. I'll reserve numbers 99 through 120 for the reporting."

Christina, I just deliver them on a silver platter, and rely on you to work your Sure Step magic.

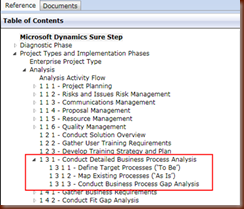

Solution 3: Oooookay. Well, although this has an element of lack of functional design to it, I think the real underlying issue is Detailed Business Process Analysis. If we look at the Analysis phase activity for Conduct Detailed Business Process Analysis (1.3.1), we see three key tasks.

Sure Step advocates for this three-step process that begins with "Define Target Process (To Be)", then moves to "Map Existing Processes (As Is)", and then analyzes any gaps. And preceding this, we can also include (per Sure Step) a solutions overview to acquaint key users with how the system works "out of the box". Why do we do this? Why do we start with Defining Target Processes? Because we are changing systems, which means we will be changing our processes. I know that may seem like an obvious statement, but it is not unusual for organizations and individuals to react negatively to the potential for change. That is why Sure Step even has guidance in the Project Management Library (and throughout the activities as well) regarding Organization Change Management (OCM).

The guidance in Sure Step related to OCM will assist the project team in helping the client navigate and manage the necessary organizational change that comes with implementing a new software system. Stories like the one above suggest to me that Organization Change Management was not considered an important activity, and that the client is scared into wanting to recreate their existing system. Personally, I take the word "consultant" seriously. We are there to consult, and advise, on the best practice. And Sure Step helps you do that in a way that can improve results, minimize risk, and increase project success.

Well, hope you found this scenario/solution format helpful. As always, we love to hear from any of our readers out there. And I love to hear about how you are using Sure Step (even in bits and pieces) to better your processes.

Wednesday, October 27, 2010

eConnect 2010 Integration Service Won't Start Due To Port 80 Conflict

By Steve Endow

Last week I deployed an eConnect 2010 integration at a client. We first installed it on the SQL Server for testing, and everything worked fine.

We then deployed it on one of the two load-balanced Citrix servers that the Dynamics GP users utilize, and that went smoothly. But when we installed and tested it on the second Citrix server, we received the now familiar "there was no endpoint listening at net.pipe" error. Easy enough to resolve, no problem!

So I opened the Services applet, and sure enough, the eConnect 2010 service was not running. But when I tried to start the service, I received an error that it failed to start. Hmmm.

I checked the Windows Event Log and found two errors, one of which was helpful.

Input parameters:

Exception type:

System.ServiceModel.AddressAlreadyInUseException

Exception message:

HTTP could not register URL http://+:80/Microsoft/Dynamics/GP/eConnect/mex/ because TCP port 80 is being used by another application.

Exception type:

System.Net.HttpListenerException

Exception message:

The process cannot access the file because it is being used by another process

So this seemed to be telling me that the eConnect 2010 service was trying to set up a listener on port 80, but something else was already using port 80. We initially thought that it might be IIS, but after stopping the IIS web site and IIS services, I used the netstat command and confirmed that something was still using port 80.
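For anyone who wants a quick scripted version of that check, here is a minimal Python sketch. It is not what we ran on the client's server (we used netstat); the function name `is_port_listening` and its defaults are my own, and it only reports whether something on the local machine accepts TCP connections on the port, not which process owns it.

```python
import socket


def is_port_listening(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    Hypothetical helper for illustration only. It tells you that a
    listener exists, not which process owns it.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise,
        # so a refused connection does not raise an exception.
        return probe.connect_ex((host, port)) == 0


if __name__ == "__main__":
    state = "in use" if is_port_listening(80) else "free"
    print(f"Port 80 appears to be {state} on this machine")
```

To find the owning process on Windows you would still need something like `netstat -ano`, which lists the process ID next to each listening port.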

After re-reading the eConnect 2010 documentation and not finding any references to http or port 80, we submitted a support case. Support responded promptly and offered two workarounds.

We chose the simpler of the two solutions, which was to change a parameter in the eConnect service config file. To do this, locate the eConnect web services configuration file, which is usually installed at:

C:\Program Files\Microsoft Dynamics\eConnect 11.0\Service\Microsoft.Dynamics.GP.eConnect.Service.exe.config

Open this file using a text or XML file editor and change the httpGetEnabled parameter to false.

So what in the world does this mean?

Changing the httpGetEnabled parameter to false disables the HTTP publishing of the Web Services Description Language (WSDL) metadata. Developers should understand what this means--for anyone else, don't worry about it if it doesn't mean anything.

We learned that by default, the eConnect 2010 service publishes its WSDL using both HTTP and named pipes (net.pipe). The HTTP method can be viewed using Internet Explorer, while the net.pipe method uses the WCF 'metadata exchange' (mex) endpoint, which can be browsed using the WCF metadata tool, svcutil.exe. And apparently Visual Studio can access the WSDL using either of these methods.

The workaround above, setting httpGetEnabled=false, turns off the HTTP WSDL publishing, thereby eliminating the need for eConnect to use port 80. The eConnect 2010 service will still provide the net.pipe WSDL in case it is necessary. I don't yet know if the WSDL is even used for a compiled / deployed eConnect integration, or if it is only necessary to set up the service reference in Visual Studio--that will have to be a separate homework assignment.
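If you need to make the httpGetEnabled change on several servers, it could be scripted. The Python sketch below is only an illustration: `SAMPLE_CONFIG` is a trimmed, hypothetical stand-in for the real config file (the wrapper elements and the default httpGetUrl are my assumptions), and `disable_http_get` is a made-up helper name. Back up the real file before trying anything like this.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed stand-in for the eConnect service config file.
# The real file contains more settings; only serviceMetadata matters here.
SAMPLE_CONFIG = """<configuration>
  <system.serviceModel>
    <behaviors>
      <serviceBehaviors>
        <behavior name="eConnectServiceBehavior">
          <!--This setting turns on Metadata Generation-->
          <serviceMetadata httpGetEnabled="true" httpGetUrl="http://localhost/Microsoft/Dynamics/GP/eConnect/mex"/>
        </behavior>
      </serviceBehaviors>
    </behaviors>
  </system.serviceModel>
</configuration>"""


def disable_http_get(config_xml: str) -> str:
    """Return the config text with httpGetEnabled set to "false"."""
    # insert_comments=True (Python 3.8+) keeps XML comments in the tree.
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    root = ET.fromstring(config_xml, parser=parser)
    nodes = list(root.iter("serviceMetadata"))
    if not nodes:
        raise ValueError("no serviceMetadata element found")
    for node in nodes:
        node.set("httpGetEnabled", "false")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(disable_http_get(SAMPLE_CONFIG))
```

The eConnect service would need to be restarted after the file is saved for the change to take effect.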

I suspect that the httpGetEnabled=false option should work just fine for most customers. In case it isn't a viable option, the second workaround that support provided was to change the port that the eConnect service uses to publish the HTTP WSDL. That was slightly more involved, and wasn't necessary for us, so we chose not to use that approach. If anyone is interested in knowing that method, for example if you have a development server that is already using port 80, let me know and I'll write a separate post on that method.

2/21/11 Update: Here are the instructions provided by MS Support to change the port number for the HTTP WSDL.

Step 1: Edit the Microsoft.Dynamics.GP.eConnect.Service.exe.config file, which is typically located in C:\Program Files\Microsoft Dynamics\eConnect 11.0\Service\. Change the httpGetUrl to use a different port, such as port 83:

<serviceBehaviors>

<behavior name="eConnectServiceBehavior">

<!--Leaves this to true in order to receive Exeption information in eConnect-->

<!--This settings turns on Metadata Generation. Metadata allows for consumers to add service references in Visual Studio-->

<serviceMetadata httpGetEnabled="true" httpGetUrl="http://localhost:83/Microsoft/Dynamics/GP/eConnect/mex"/>

</behavior>

</serviceBehaviors>

Step 2: Use "netsh http add urlacl url=http://+:port_number/ user=DOMAIN\UserName" at a Command Prompt to assign the HTTP namespace to the user account that the "eConnect 2010 service" runs under. The syntax below is what I used on my computer to configure it for port 83. Note that the ADOMAIN\GPService account is the Windows account running the "eConnect 2010 service", as shown under Administrative Tools - Services.

netsh http add urlacl url=http://+:83/ user=ADOMAIN\gpservice
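The two steps have to agree on the port number, so here is a small Python sketch that derives both values from one port setting. The helper names `with_port` and `urlacl_command` are hypothetical, and the starting URL without a port is my assumption about the default; the sketch only builds the strings and does not edit the config file or run netsh.

```python
from urllib.parse import urlsplit, urlunsplit


def with_port(url: str, port: int) -> str:
    """Return url with its port replaced (or added), keeping host and path."""
    parts = urlsplit(url)
    return urlunsplit(parts._replace(netloc=f"{parts.hostname}:{port}"))


def urlacl_command(port: int, account: str) -> str:
    """Build the netsh command from Step 2 for the given port and account."""
    return f"netsh http add urlacl url=http://+:{port}/ user={account}"


if __name__ == "__main__":
    port = 83
    # Assumed default URL (no explicit port); adjust to match your config file.
    default_url = "http://localhost/Microsoft/Dynamics/GP/eConnect/mex"
    print("httpGetUrl:", with_port(default_url, port))
    print("command:   ", urlacl_command(port, r"ADOMAIN\gpservice"))
```

Generating both from one variable avoids the easy mistake of reserving one port with netsh while the config file points at another.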

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified IT Professional in Los Angeles. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

Tuesday, October 26, 2010

eConnect Error: Requested registry access is not allowed

While testing a new eConnect 9 integration for a client, I received the following error when trying to send my XML data to eConnect:

Requested registry access is not allowed.

After Googling this error, I recalled that I've probably run into this somewhere in the past, but can't recall ever having the issue with eConnect.

I found this thread which suggested that the problem was due to the application or eConnect being unable to write to the event log. Okay, I can see that.

So I opened Event Viewer, and sure enough, there was no eConnect event log. But I know that I've developed at least one other eConnect integration for this client, and I'm sure that eConnect is installed and working.

Assuming that the missing Event Log was the issue, I found some KB articles that suggested turning off UAC, as that can prevent a .NET application from creating an event log on newer OSs like Server 2008, Windows Vista, or Windows 7. Well, the virtual server that I use for this client is Server 2003, so UAC can't be the cause.

I then found this MS support article and this KB article that both offer some possible causes and additional information about the problem. I didn't feel like spending the time to determine the exact cause of the missing event log, but the articles told me the location of the Event Log registry entries. Just as my server has no eConnect event log, the registry is also missing any eConnect entries, as one would expect.

I didn't want to figure out if the eConnect installation created the event log, or whether a call to eConnect would create it on the fly--I just wanted to create the registry entries and get the log set up. Rather than trying to manually create registry keys, I dug through my pile of virtual servers and found an old one that had a GP 9 and GP 10 installation, with both versions of eConnect. That server had an eConnect event log, and corresponding registry entries.

So I exported the eConnect key from HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Eventlog to a .reg file, copied that to my problem server, and imported the .reg file.

Here is what my exported registry file contained:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Eventlog\eConnect]
"Retention"=dword:00000000
"MaxSize"=dword:01000000
"File"=hex(2):25,00,53,00,79,00,73,00,74,00,65,00,6d,00,52,00,6f,00,6f,00,74,\
  00,25,00,5c,00,53,00,79,00,73,00,74,00,65,00,6d,00,33,00,32,00,5c,00,43,00,\
  6f,00,6e,00,66,00,69,00,67,00,5c,00,65,00,43,00,6f,00,6e,00,6e,00,65,00,63,\
  00,74,00,2e,00,65,00,76,00,74,00,00,00
"Sources"=hex(7):4d,00,69,00,63,00,72,00,6f,00,73,00,6f,00,66,00,74,00,2e,00,\
  47,00,72,00,65,00,61,00,74,00,50,00,6c,00,61,00,69,00,6e,00,73,00,2e,00,65,\
  00,43,00,6f,00,6e,00,6e,00,65,00,63,00,74,00,00,00,65,00,43,00,6f,00,6e,00,\
  6e,00,65,00,63,00,74,00,00,00,00,00
"CustomSD"="O:BAG:SYD:(D;;0xf0007;;;AN)(D;;0xf0007;;;BG)(A;;0xf0007;;;SY)(A;;0x7;;;BA)(A;;0x7;;;SO)(A;;0x3;;;IU)(A;;0x3;;;SU)(A;;0x3;;;S-1-5-3)"
"AutoBackupLogFiles"=dword:00000000

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Eventlog\eConnect\eConnect]
"EventMessageFile"="c:\\WINDOWS\\Microsoft.NET\\Framework\\v1.1.4322\\EventLogMessages.dll"

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Eventlog\eConnect\Microsoft.GreatPlains.eConnect]
"EventMessageFile"="c:\\WINDOWS\\Microsoft.NET\\Framework\\v1.1.4322\\EventLogMessages.dll"

Note that you need to have the EventLogMessages.dll file located at C:\WINDOWS\Microsoft.NET\Framework\v1.1.4322\EventLogMessages.dll.
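If you are curious what the "File" and "Sources" values in that export actually hold, the hex data is just UTF-16 text. Here is a minimal Python sketch that decodes them. The function name decode_reg_hex is my own for illustration, and the sketch assumes the values are little-endian UTF-16 with null terminators, which is how the data above reads.

```python
def decode_reg_hex(value: str) -> list[str]:
    """Decode a hex(2) or hex(7) value from a .reg export into strings."""
    # Keep only the data after the "hex(n):" type prefix.
    _, _, data = value.partition(":")
    # Drop line-continuation backslashes and all whitespace.
    data = "".join(data.replace("\\", "").split())
    raw = bytes(int(b, 16) for b in data.split(",") if b)
    # Assumption: the bytes are UTF-16 little-endian text.
    text = raw.decode("utf-16-le")
    # Strings are null-terminated; hex(7) holds several in a row.
    return [s for s in text.split("\x00") if s]


FILE_VALUE = r"""hex(2):25,00,53,00,79,00,73,00,74,00,65,00,6d,00,52,00,6f,00,6f,00,74,\
  00,25,00,5c,00,53,00,79,00,73,00,74,00,65,00,6d,00,33,00,32,00,5c,00,43,00,\
  6f,00,6e,00,66,00,69,00,67,00,5c,00,65,00,43,00,6f,00,6e,00,6e,00,65,00,63,\
  00,74,00,2e,00,65,00,76,00,74,00,00,00"""

SOURCES_VALUE = r"""hex(7):4d,00,69,00,63,00,72,00,6f,00,73,00,6f,00,66,00,74,00,2e,00,\
  47,00,72,00,65,00,61,00,74,00,50,00,6c,00,61,00,69,00,6e,00,73,00,2e,00,65,\
  00,43,00,6f,00,6e,00,6e,00,65,00,63,00,74,00,00,00,65,00,43,00,6f,00,6e,00,\
  6e,00,65,00,63,00,74,00,00,00,00,00"""

print(decode_reg_hex(FILE_VALUE))     # ['%SystemRoot%\\System32\\Config\\eConnect.evt']
print(decode_reg_hex(SOURCES_VALUE))  # ['Microsoft.GreatPlains.eConnect', 'eConnect']
```

So the export points the log at an eConnect.evt file under System32\Config and registers two event sources, which matches the two subkeys in the file.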

Like magic, the registry access error went away, and I was able to get the actual eConnect error for the data I was trying to import.

This was with eConnect 9, so this resolution is probably pretty dated, and presumably this is a pretty rare server-specific problem, but in case anyone else runs into it, this approach seemed to shortcut the troubleshooting process and let me jump right to a simple resolution.

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified Professional. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Wednesday, October 20, 2010

Consultant Tools Series: Windows Grep (WinGrep)

Yesterday I received an e-mail from a client saying that they were seeing duplicate journal entry lines in Dynamics GP for JEs that had been imported from an external system.

Knowing how my eConnect JE import was developed, I was pretty confident that my integration wasn't inadvertently importing JE lines twice, and the client found that only certain JEs were being duplicated. When I looked at the JEs in Dynamics GP, I saw that they were nearly identical, but the DR and CR descriptions were slightly different in the "real" JE vs. the "duplicate" JE. That led me to believe that the external system was potentially sending some duplicate data to GP.

With this in mind, I chose two duplicate JEs out of Dynamics GP from September and documented them. Now I just had to confirm what the external system sent for those JEs.

Since I always archive a copy of all source data files for my integrations, I jumped over to the archive directory and extracted all 2,115 JE files for the month of September. The files are CSV files, but have a .dat extension. I first tried to use the Windows Server 2008 search feature that is built into Windows Explorer. I typed in the unique ID for one of the JEs I had documented, but received no results. Figuring that Windows wouldn't search the .dat file extensions, I renamed all of the files to *.txt and tried again. No search results. I was sure that the data that I was searching for was present in one of the files, but the Windows Search feature just wasn't doing the job.

I even tried copying the files over to my Windows 7 workstation, where I have had some success with Windows search, but still got no results.

So I did some Googling for full text file searching in Windows, and one solution was constantly referenced in the results: Windows Grep, or WinGrep.

Windows Grep is a shareware application that implements the functionality of Unix grep in an easy-to-use Windows application. I downloaded Windows Grep, pointed it to my directory with over 2,000 .dat files, and told it to search for my unique JE ID. In a few seconds, it found the file that I needed. When I opened the file, sure enough, it contained two JEs.
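For a one-off search like this, a few lines of script can do the same basic job. This is only a rough Python sketch of the idea, not how Windows Grep works internally. The function name, the "*.dat" default, and the assumption that the files are small enough to read whole are all mine.

```python
from pathlib import Path


def find_files_containing(folder, needle, pattern="*.dat"):
    """Return the sorted names of files in folder whose text contains needle."""
    matches = []
    for path in sorted(Path(folder).glob(pattern)):
        # Assumption: files are plain text and small enough to read at once.
        text = path.read_text(encoding="utf-8", errors="ignore")
        if needle in text:
            matches.append(path.name)
    return matches
```

Calling find_files_containing with the archive directory and the unique JE ID would return the names of the files that mention that ID.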

I was able to quickly find the source data files for the two JEs that I had documented, and send everything to the vendor that maintained the external system. They then discovered that a cross join was being performed during their export, resulting in duplicate JEs being exported.

I'm guessing I only used 0.1% of the functionality of Windows Grep, and it has many additional features that sound interesting, so I'm impressed with it so far, and will try and see if I can figure out any other situations where it may be useful. For $30, it is a bargain if you need its powerful search (and replace) functionality.

Update: Reader Stanley Glass has posted a comment suggesting the free Agent Ransack utility, developed by Mythicsoft out of Oxford, England, for searching files. It also appears to have a comprehensive feature set, so now I have two tools to consider for these types of searches!

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified Professional. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Monday, October 18, 2010

Excel Tip: Count the Number of Occurrences of a Single Character in One Cell

I am currently helping a partner with a conversion from QuickBooks to Dynamics GP--specifically to migrate QuickBooks transaction Class data into GP Analytical Accounting transactions. For various reasons, standard, and even non-standard migration tools have been unable to convert the QuickBooks data, so we've been forced to export the data and import it into GP.

The eConnect integration I developed works great, but one of the annoyances has been preparing a QuickBooks export file for import to GP. If you are familiar with QuickBooks exports, you know that when data is exported to Excel, it typically looks quite presentable, but is not exactly import-friendly.

While trying to map the QuickBooks chart of accounts to the GP chart of accounts, I had to determine how many "levels" the QuickBooks account had. So for instance, there might be Account: Sub-Account: Department: Location: Expense: Sub-Expense. Out of the pile of QB accounts, I needed to determine the maximum number of "levels" the accounts had. Since there is a colon between each level, I just needed to count the number of colons in the cell, and then add one. Simple!

But I am pretty sure I've never had to count the number of occurrences of a value in a single cell. I've used the SEARCH function to determine whether a value exists at all, but never had to count the occurrences of that value.

With Google to the rescue, I stumbled across a Microsoft Support article that provided several examples of how to count the occurrences of a string in an Excel file.

http://support.microsoft.com/kb/213889

And here is the formula for counting the number of occurrences of a character in a cell:

=LEN(cell_ref)-LEN(SUBSTITUTE(cell_ref,"a",""))

Pretty darn smart. Maybe, on a good day, with enough sleep, I could have come up with something that elegant, but I am pretty sure that article saved me a lot of time.
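To see why the formula works, here is the same length-difference trick as a small Python sketch, applied to the colon-separated account levels described above. The function name and the sample account strings are made up for illustration.

```python
def count_levels(account: str, separator: str = ":") -> int:
    """Count account levels as (number of separators) + 1."""
    # Same idea as LEN(cell) - LEN(SUBSTITUTE(cell, ":", "")):
    # removing every separator shortens the text by one character each.
    separators = len(account) - len(account.replace(separator, ""))
    return separators + 1


# Hypothetical sample accounts, not real client data.
accounts = [
    "Account: Sub-Account",
    "Account: Sub-Account: Department: Location: Expense: Sub-Expense",
    "Account",
]
max_levels = max(count_levels(a) for a in accounts)
print(max_levels)  # 6
```

Note that the trick only counts correctly when the separator is a single character. For a longer search string you would divide the length difference by the length of that string.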

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified Professional. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Friday, October 15, 2010

How Not to Design an Integration: Interface Follies

I've been working on a few different Dynamics GP integrations lately that seem to have a common theme. The Dynamics GP side of things is fine, but it's the external systems that are sending data to, or receiving data from Dynamics GP that have some glaring limitations. I understand that all software applications have limitations or are sometimes tasked to do things for which they weren't designed, but the issues I've run into recently seem to be pretty obvious limitations in the applications' interfaces--something that I actually don't run into very often.

So here are some lessons on how not to design an integration.

The first is an import from a widely used HR and payroll system to GP. I was asked to import employees from a CSV file into Dynamics GP. No problem, relatively straightforward in concept. We worked with the client to design the interface, and one of the questions we had was "How often do you want the employee information imported into Dynamics GP?" Because they wanted the ability to set up a new employee in GP on short notice, they asked that the import run at least hourly. Great, not a problem. We documented this requirement and moved on to other topics. Well, when we shared the requirements with the HR system vendor, we were told that the hourly import requirement was not possible. What? We aren't asking for near real-time, just hourly. We were told that the export from the HR system could only be run once per day. Period. End of conversation.

Well, from what I can glean, it seems that the HR system wasn't really designed to export new or changed data. The vendor had figured out a way to do it using extra tables to maintain copies of the employee table that were compared to the live table, but my understanding is that the design was based on a date stamp field. Not a date time stamp--just a date stamp. Being a GP developer, I'm not about to throw stones at any system that only uses a date stamp in their database tables (cough, cough, GP, cough--although GP 10 and 2010 are starting to sneak time stamps into some tables). But to design an export process that can only export once per day? What were they thinking? So, we're stuck with a once-per-day integration, at least until the client complains enough to see if the HR vendor will change their export routine.

The next integration involved exporting purchase orders from Dynamics GP and sending them to a third party logistics warehouse where the orders would be received. I had already developed the PO export with both e-mail and FTP capability--we were just adding a new warehouse into the mix. We prepared a sample PO export file and dropped it on their FTP site. They responded that our test file was invalid because it didn't comply with their undocumented file naming convention. Okay, no problem. Except here is the file naming convention:

AmmddssXYZ.CSV

The letter "A" represents a fixed prefix, and the XYZ is the fixed suffix--no problem. And mmdd is the month and day. But ss? We were told that ss represents a sequence number. And the file names had to be unique--a file name could not be repeated during a given day. So, we could either increment a sequence number, or we could use a two digit hour. That means we would be limited to exporting no more than once per hour, or, if we used a sequence, the client would not be able to send more than 99 files per day. This GP site generates millions of sales and purchasing transactions per year, so 99 transactions per day in any category is a very small number. Fortunately, because these are purchase orders that won't need to be received immediately by the warehouse, hourly exports were okay. But in this day and age, a limit of 99 was surprising to see, especially since the limit only exists because of a two character limit within a file name.
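To make the limitation concrete, here is a rough Python sketch of a name generator for that convention. The function name is hypothetical, and the sketch assumes the sequence runs from 01 to 99, since the warehouse never told us whether 00 was allowed.

```python
from datetime import date


def warehouse_file_name(day: date, sequence: int) -> str:
    """Build an AmmddssXYZ.CSV name for the given day and sequence number."""
    # Assumption: valid sequence numbers are 1 through 99 (two digits).
    if not 1 <= sequence <= 99:
        raise ValueError("sequence must fit in two digits (1-99)")
    return f"A{day:%m%d}{sequence:02d}XYZ.CSV"


print(warehouse_file_name(date(2010, 10, 15), 7))  # A101507XYZ.CSV
```

The 100th file of the day simply has no valid name, which is the whole problem with a two character sequence.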

The next one felt like a computing time warp. After submitting the same PO files to the warehouse for testing, we were told that our file "failed and caused a hard fault". An innocent little CSV file caused a "hard fault"? Should I even ask what that means? Is there such a thing as a "soft fault"? So, what caused this hard fault? A comma. Wait, a comma in a CSV file caused a failure? Yessiree. It wasn't a comma delimiter that caused the problem, it was a comma within a field value that caused the problem. It was a vendor name like "Company, Inc." Even though my CSV file had quotation marks around the value, the vendor's system was not designed to parse quotation marks in the CSV file, and was unable to handle commas that are not delimiters. So, in this vendor's world, they apparently don't have any commas in their system--just pop that key off of the keyboard. I'm guessing that with some ingenuity, even a 1970s-era mainframe could handle commas within quoted field values. But not this import routine. So I had to modify my standard CSV export routine to eliminate quotation marks and also remove commas from all field values in the CSV file. Brilliant.
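Here is a small Python sketch of the difference, using the standard csv module. The sample row and the helper names are invented for illustration. I don't know how the warehouse's import was actually written, so the "naive" split below is only my guess at the kind of parsing that would fail this way.

```python
import csv
import io

# Hypothetical PO row with a comma inside the vendor name.
row = ["PO1001", "Company, Inc.", "25.00"]


def to_quoted_csv(fields):
    """Write one CSV line, quoting any field that contains a comma."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(fields)
    return buffer.getvalue().rstrip("\r\n")


def to_stripped_csv(fields):
    """The workaround: no quotation marks, and no commas inside values."""
    return ",".join(f.replace(",", "").replace('"', "") for f in fields)


quoted = to_quoted_csv(row)
print(quoted)                         # PO1001,"Company, Inc.",25.00
print(next(csv.reader([quoted])))     # ['PO1001', 'Company, Inc.', '25.00']
print(len(quoted.split(",")))         # 4 -- a naive split sees an extra field
print(to_stripped_csv(row))           # PO1001,Company Inc.,25.00
```

A quote-aware parser recovers the three original fields, while a plain split on commas produces four, so the only safe thing to send a parser like that is the stripped version.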

If you are developing an internal integration that has very limited functionality, maybe these types of design limitations might be acceptable or go unnoticed, but both of these vendors have systems that have to interact with other external software applications. To not add that time stamp, to not add a third character to the file sequence number, and to not bother to parse quoted CSV field values just seems like poor integration design. Or negligent laziness.

On the other hand, I have a story about an interface that was reasonably designed, but still ran into issues. A Dynamics GP ISV solution was set up to export transactions to CSV files, which were then imported by an external system. The developer used a file naming convention that included year, month, day, hour, minute, and second--perfectly reasonable given the requirements and design assumptions that were provided. The integration worked fine--for a while. But after a few months, the client's volume increased to the point where so many transactions were being processed so frequently that files were being overwritten several times within a one second period. We realized that it isn't often that a typical business system needs to have a file naming convention that includes milliseconds, but for various reasons, this was one such situation. I forget the details, but I believe for some reason it wasn't practical to include milliseconds in the file name, or perhaps even the milliseconds were not good enough, so we ended up generating a random number that would be appended as a suffix on the file name. Even if 20 files were generated per second, or 20 in the same millisecond, it was unlikely that two would happen to get the same random suffix of "273056". Sometimes you just have to get creative.
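A minimal Python sketch of that naming approach might look like the following. This is not the ISV's actual code. The "EXPORT" prefix, the underscore layout, and the six digit suffix length are assumptions I've made for the example.

```python
import secrets
from datetime import datetime


def export_file_name(now: datetime, prefix: str = "EXPORT") -> str:
    """Build a file name from a timestamp plus a random six digit suffix."""
    # The random suffix separates files created within the same second.
    suffix = secrets.randbelow(1_000_000)
    return f"{prefix}_{now:%Y%m%d%H%M%S}_{suffix:06d}.csv"


print(export_file_name(datetime(2010, 10, 15, 14, 30, 5)))
```

A random suffix makes a collision unlikely rather than impossible, so a stricter design would also check whether the file already exists before writing it.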

Steve Endow is a Dynamics GP Certified Trainer and Dynamics GP Certified Professional. He is also the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

http://www.precipioservices.com

Monday, October 11, 2010

Attend the Decisions Fall 2010 Virtual Conference!

The folks over at MS Dynamics World have been very busy planning and organizing the upcoming Decisions Fall 2010 virtual conference.

The Dynamics GP day of the conference is Tuesday, November 2nd, so make sure to register and put it on your calendar! The conference is free to attend thanks to the great firms who have helped sponsor the event.

Despite the low price, there will be a ton of great content delivered by the top Dynamics GP luminaries, such as Mark Polino, author of the new Dynamics GP 2010 Cookbook, Jivtesh Singh, Leslie Vail, and Michael Johnson. There will also be some top Microsoft Dynamics team members like Mike Ehrenberg, Guy Weismantel, and Bill Patterson presenting.

I was also graciously invited to be a presenter, so if you want to learn more about how you can use the Dynamics GP Developer's Toolkit to get more value out of Dynamics GP, make sure to attend my Tailoring Microsoft Dynamics GP presentation where I'll discuss several examples of how I have helped clients improve their efficiency, reduce costs, and produce competitive advantage by customizing Dynamics GP.

See you there!

Monday, October 4, 2010

Sure Step Certified, or Certifiable?

I just wanted to share a great blog post by a fellow Sure Step trainer in Europe. Some great hints on prepping for the Sure Step exam if you haven't already passed it :)

Also, I encourage you to read the comments on the blog, as he has some great responses to common complaints and issues with Sure Step that I hear in classes and conversations. As always, I think we all have room for improvement in our approaches, and Sure Step has a variety of tools to assist you. I tell students in all of my classes that you shouldn't feel pressure to adopt ALL of Sure Step at one time, but should look critically at your organization and find the areas that you could improve. Then look to the guidance in Sure Step to help you. Not to be sappy, but in the end, we all want happy customers, right?

Trouble with proposals being "off" from the real project? Look to the Diagnostic phase guidance, including Decision Accelerators, to reduce your risk and increase the customer's confidence in your solution.

Development projects don't seem to meet the customer's needs the first time around? Look to the design document guidance in the Design phase in Sure Step.

Yadda, yadda, yadda. Give it a chance with an open mind, and you might be pleasantly surprised :)
