My blog has moved! Please visit the new blog at: https://blog.steveendow.com/ I will no longer be posting to Dynamics GP Land, and all new posts will be at https://blog.steveendow.com Thanks!

Wednesday, December 30, 2009

Display VS Tools Assembly Version

Recently I've been buried writing a VS Tools AddIn that has been a bit more complex than any previous AddIn that I've had to develop. Not terribly complex, just more involved than the typical AddIn widget that I write frequently. It's basically like a mini-module: A custom window, with a custom GP menu item, its own custom table, and lookups that query from GP tables.

And since the client uses C# internally, I've been transitioning to C# as well, so it's only the second project that I've done in C#.

Anyway, one of the things that I like to have on any user interface application that I develop is a visible build number. I have never used Help -> About dialogs (although maybe I should), so I just prefer to use a label or status strip to discreetly show the app version.

Well, with VS Tools AddIns, this is a bit tricky.

There are at least 3 different ways that I've found to try and get the version number of your .NET application. Which approach you use depends on the type of app you've written (WinForm vs. non-WinForm, and other subtleties that I don't fully understand). With a VS Tools AddIn, the 3 approaches produce different results, only one of which is truly the Assembly Version of my DLL.

One approach (Application.ProductVersion) displayed 10.0.0357 (or something like that), as it was apparently referencing some GP component that was invoking my DLL.

Another (System.Reflection.Assembly.GetCallingAssembly) simply displayed 2.0.0.0. I'm not entirely sure what that was referring to, but from what I could tell, it might have been a base .NET assembly version, like Windows.Forms.

And finally, #3, the winner, produced the version info I was looking for. After googling through several results, I found it on this web page in VB syntax.

Having taken the C# plunge, I ended up writing it this way:

StringBuilder buildNumber = new StringBuilder("Build: ");

buildNumber.Append(System.Diagnostics.FileVersionInfo.GetVersionInfo(System.Reflection.Assembly.GetExecutingAssembly().Location).FileMajorPart);

buildNumber.Append(".");

buildNumber.Append(System.Diagnostics.FileVersionInfo.GetVersionInfo(System.Reflection.Assembly.GetExecutingAssembly().Location).FileMinorPart);

buildNumber.Append(".");

buildNumber.Append(System.Diagnostics.FileVersionInfo.GetVersionInfo(System.Reflection.Assembly.GetExecutingAssembly().Location).FileBuildPart);

buildNumber.Append(".");

buildNumber.Append(System.Diagnostics.FileVersionInfo.GetVersionInfo(System.Reflection.Assembly.GetExecutingAssembly().Location).FilePrivatePart);

lblBuild.Text = buildNumber.ToString();

There are probably a few other ways to do it, and probably more elegant ways of assembling the string, but I had a deadline, so this was good enough for now.
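One more compact way to assemble the same string is sketched below. It reads the FileVersionInfo once and formats the four parts in a single call. The class and method names (BuildInfo, Format, GetBuildLabel) are made up for this illustration. Note that this reads the file version of the DLL, which matches the assembly version only when both attributes are set to the same value; Assembly.GetExecutingAssembly().GetName().Version returns the assembly version itself.

```csharp
using System;
using System.Diagnostics;
using System.Reflection;

// Hypothetical helper class; the names are illustrative, not from the original AddIn.
public static class BuildInfo
{
    // Formats the four file-version parts as "Build: major.minor.build.private".
    public static string Format(int major, int minor, int build, int privatePart)
    {
        return string.Format("Build: {0}.{1}.{2}.{3}", major, minor, build, privatePart);
    }

    // Reads the file version of the assembly that contains this code
    // (the AddIn DLL, when this class is compiled into it).
    public static string GetBuildLabel()
    {
        string path = Assembly.GetExecutingAssembly().Location;
        FileVersionInfo info = FileVersionInfo.GetVersionInfo(path);
        return Format(info.FileMajorPart, info.FileMinorPart,
                      info.FileBuildPart, info.FilePrivatePart);
    }
}
```

With that in place, the label assignment becomes lblBuild.Text = BuildInfo.GetBuildLabel();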

Over the next week I will try and share some tips and tricks that I used to get around some VS Tools limitations and a VS Tools bug.

Tuesday, December 29, 2009

Dynamics GP Custom VBA Lookups

Clients want to utilize user defined or custom fields, but they want to have the values validated, and they want to allow the user to select a valid value from a list. Makes sense.

I briefly checked out the drop down and list box controls available in Modifier, but they are pretty limited, and aren't really oriented towards displaying dynamic result sets from a database query.

So I ventured out and created my own custom lookup window in VBA.

As a side note, my biggest gripe with the standard GP lookup windows is their lack of a simple, "real-time" search field. By "real-time", I mean that I want the lookup window to instantly filter the displayed records based on what I type in the lookup search box--as I type it. And I want the window to search multiple fields simultaneously--not just one field. For standard GP lookup fields, Rockton SmartFill does an excellent job in this regard, but sadly, in my experience, few clients have been willing to pay for SmartFill.

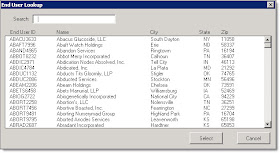

I'm going to show an example of a lookup that displays Sales Prospect records. In this case, the client called prospects "End Users", so it is called the "End User Lookup" window.

I first created a custom User Form in VBA, and added a Search field at the top. Because VBA is pretty limited in terms of controls, I had to use a ListBox to display my results. Finally, I have a Select and Cancel button.

When the form loads, I utilize UserInfoGet to have it query the GP company database and retrieve all prospects. I store those prospects in a client-side disconnected ADO recordset so that they remain in memory. I then have a simple procedure that displays the recordset results.

When a user types in the Search box, I send the equivalent of a SQL WHERE clause to the ADO recordset Filter property. This dynamically changes the records available in the recordset, allowing me to quickly redisplay only matching records as the user types each character.

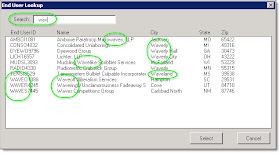

As you can see in this example, when I type "wav" in the search box, I am simultaneously searching on the ID, name, and city of the prospect records, narrowing my list down from 5,000 records to 10 records as fast as I can type the three letters. The search results change almost instantaneously as I type each character.

At first I was concerned that this type of dynamic, real-time searching would run into performance issues. But, to my surprise, it's very responsive. One reason is that as the user types each character in the search box, the number of records that meet the search criteria and need to be displayed gets smaller and smaller. The ADO Filter property is so fast that the search itself is instantaneous, leaving only the work of displaying the resulting records. So even if I started with 10,000 records, after I type just one word, I will have quickly narrowed down my result set to a much smaller number of records that can be displayed quickly in the listbox.

There are two minor limitations with this solution, both of which are imposed by VBA. First, because I am limited to a list box instead of a ListView or Grid, I chose to use static labels for column headers, and fixed columns, and have therefore decided to create a separate user form for each lookup window that I need. You could invest some time to add code to make a single user form work for multiple lookups, but since I have only needed one or two for any given client, I didn't bother.

The second limitation is that the list box control doesn't have a sorting feature. Based on my experience, because the lookup is only used to select one record, and because the Search feature is so flexible and fast, there was never a requirement to sort records. But if you needed to sort, you could add some buttons at the top of each column and then use the ADO recordset Sort property.

Based on this VBA lookup, I have subsequently created a similar .NET class that I use for lookups with the VS Tools AddIns that I have been working on. For that class, I did spend the time to design it to work for multiple lookup types, so I can now use it easily to look up customers, addresses, inventory items, sales orders, and even records in custom tables. It also has a dynamic real-time search capability, with the added benefit of the better controls and capabilities offered by .NET. If anyone is interested in that, let me know and I'll try and find the time to create another post discussing it.
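As a rough idea of how the same real-time, multi-field search could look on the .NET side, here is a minimal sketch that builds a DataView RowFilter expression from the search text. This is not the actual lookup class mentioned above; the LookupFilter class, its method names, and the choice of DataView.RowFilter are all assumptions made for illustration. Apostrophes are doubled and LIKE wildcard characters are bracketed, as the DataColumn.Expression syntax requires.

```csharp
using System;
using System.Data;
using System.Text;

// Hypothetical helper; a sketch of the idea, not the original .NET lookup class.
public static class LookupFilter
{
    // Builds a RowFilter that matches the search text anywhere in any listed column.
    // Returns an empty string (no filter) when the search box is blank.
    public static string BuildRowFilter(string searchText, params string[] columns)
    {
        if (searchText == null || searchText.Trim().Length == 0 ||
            columns == null || columns.Length == 0)
        {
            return string.Empty;
        }

        string escaped = EscapeLikeValue(searchText.Trim());
        string[] clauses = new string[columns.Length];
        for (int i = 0; i < columns.Length; i++)
        {
            clauses[i] = string.Format("[{0}] LIKE '%{1}%'", columns[i], escaped);
        }
        return string.Join(" OR ", clauses);
    }

    // Doubles apostrophes and brackets the characters that LIKE treats specially.
    private static string EscapeLikeValue(string value)
    {
        StringBuilder sb = new StringBuilder(value.Length);
        foreach (char c in value)
        {
            if (c == '\'')
            {
                sb.Append("''");
            }
            else if (c == '*' || c == '%' || c == '[' || c == ']')
            {
                sb.Append('[').Append(c).Append(']');
            }
            else
            {
                sb.Append(c);
            }
        }
        return sb.ToString();
    }
}
```

In a search box's TextChanged handler, the result would be assigned to the RowFilter of a DataView over the in-memory table, for example view.RowFilter = LookupFilter.BuildRowFilter(txtSearch.Text, "PROSPID", "CUSTNAME", "CITY"); where view and txtSearch are placeholder names. Like the ADO Filter property, this filters data already held in memory, so no round trip to SQL Server happens per keystroke.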

Below is the code behind the VBA User Form.

Private rsProspects As New ADODB.Recordset

Private Sub cmdCancel_Click()

Me.Hide

End Sub

Private Sub cmdSelect_Click()

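'Return the selected ID to whichever calling window is open (same logic as the double-click handler)

If CustomerAddressMaintenance.IsLoaded Then

CustomerAddressMaintenance.EndUserID.Value = ProspectList.Value

ElseIf SalesUserDefinedFieldsEnt.IsLoaded Then

SalesUserDefinedFieldsEnt.UserDefined3.Value = ProspectList.Value

End If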

Me.Hide

End Sub

Private Sub ProspectList_DblClick(ByVal Cancel As MSForms.ReturnBoolean)

If CustomerAddressMaintenance.IsLoaded Then

CustomerAddressMaintenance.EndUserID.Value = ProspectList.Value

ElseIf SalesUserDefinedFieldsEnt.IsLoaded Then

SalesUserDefinedFieldsEnt.UserDefined3.Value = ProspectList.Value

End If

Me.Hide

End Sub

Private Sub SearchFilter_Change()

Dim strFilter As String

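'Double any apostrophes so names like O'Neil do not break the filter expression

strFilter = Replace(Trim(SearchFilter.Value), "'", "''")

If strFilter <> "" Then

'Search the ID, name, and city columns at the same time

rsProspects.Filter = "PROSPID LIKE '%" & strFilter & "%' OR CUSTNAME LIKE '%" & strFilter & "%' OR CITY LIKE '%" & strFilter & "%'"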

Else

rsProspects.Filter = adFilterNone

End If

Call PopulateProspectList

End Sub

Private Sub UserForm_Activate()

SearchFilter.Value = ""

rsProspects.Filter = adFilterNone

Call QueryProspects

Call PopulateProspectList

SearchFilter.SetFocus

End Sub

Private Sub UserForm_Initialize()

ProspectList.ColumnCount = 5

ProspectList.ColumnWidths = "80;160;90;30;80"

End Sub

Private Sub PopulateProspectList()

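Dim lngRecords As Long

Dim recordCount As Long

Dim strRecords() As String

lngRecords = rsProspects.recordCount

If lngRecords > 0 Then

ReDim strRecords(lngRecords - 1, 4)

recordCount = 0

'Start from the first record of the current (possibly filtered) recordset

rsProspects.MoveFirst

While Not rsProspects.EOF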

strRecords(recordCount, 0) = rsProspects.Fields("PROSPID").Value

strRecords(recordCount, 1) = rsProspects.Fields("CUSTNAME").Value

strRecords(recordCount, 2) = rsProspects.Fields("CITY").Value

strRecords(recordCount, 3) = rsProspects.Fields("STATE").Value

strRecords(recordCount, 4) = rsProspects.Fields("ZIP").Value

recordCount = recordCount + 1

rsProspects.MoveNext

Wend

ProspectList.List() = strRecords

Else

ProspectList.Clear

End If

End Sub

Private Sub QueryProspects()

'rsProspects.State

'0 = Closed

'1 = Opened

If rsProspects.State = 1 Then

rsProspects.Close

End If

Dim oConn As ADODB.Connection

Dim strSQL As String

Set oConn = UserInfoGet.CreateADOConnection

oConn.DefaultDatabase = UserInfoGet.IntercompanyID

oConn.CursorLocation = adUseClient

rsProspects.CursorLocation = adUseClient

strSQL = "SELECT RTRIM(PROSPID) AS PROSPID, RTRIM(CUSTNAME) AS CUSTNAME, RTRIM(CITY) AS CITY, RTRIM(STATE) AS STATE, RTRIM(ZIP) AS ZIP FROM SOP00200 ORDER BY PROSPID"

Set rsProspects = oConn.Execute(strSQL)

'Disconnect the recordset so it stays in memory after the connection is closed

Set rsProspects.ActiveConnection = Nothing

oConn.Close

End Sub

Integration Migration Frustration

I looked at the integrations and saw that they were relatively simple. They read from a few SQL tables in a staging database on the GP SQL Server, and only had a few simple scripts to call stored procedures to prepare the data prior to import by IM.

Pretty simple, right? I documented my findings and declared that the upgrade should have no impact on the integrations.

A month later, and many projects later, I completely forgot the details of the review that I performed. And in the meantime, the client discovered that their old SQL server was underpowered and that they would need to install GP 10 on a new SQL server machine. I didn't get that particular memo, so I was oblivious to this new detail.

On the scheduled date, the partner performed the upgrade to GP 10 on the new server, and all was swell. Until the client tried to run their integrations.

Integration Manager complained that the source table could not be found. Hmmm. That's odd. And remember at this point, I didn't realize that a new SQL server had been installed.

When I checked the table list, a bunch of random objects were listed--none of which looked like a staging table. The integrations were using a DSN within IM, so when I checked the DSN, I found the problem. By default, DSNs created in IM do not have the database name set in the connection string. Although a new DSN had been set up to point to the new SQL server, the staging database was not listed. So I added the "Database=GPImport" parameter to the connection string, and voilà, the appropriate tables appeared in the Data Source table list.
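The fix itself was made in IM's DSN settings, but the same check is easy to express in code, for example in a pre-upgrade review script. The sketch below is only an illustration: the DsnConnection class, the EnsureDatabase method, and the "GPStaging" DSN name used in the usage note are hypothetical. It adds a Database keyword to an ODBC-style connection string only when one is not already present.

```csharp
using System;
using System.Data.Common;

// Hypothetical helper; class, method, and DSN names are illustrative only.
public static class DsnConnection
{
    // Returns the connection string with a Database keyword added if it was missing.
    // An existing Database value is left untouched.
    public static string EnsureDatabase(string connectionString, string database)
    {
        // true = parse and build the string using ODBC rules
        DbConnectionStringBuilder builder = new DbConnectionStringBuilder(true);
        builder.ConnectionString = connectionString;

        if (!builder.ContainsKey("Database"))
        {
            builder["Database"] = database;
        }
        return builder.ConnectionString;
    }
}
```

Calling DsnConnection.EnsureDatabase("DSN=GPStaging", "GPImport") would yield a connection string that names both the DSN and the GPImport staging database, which is what the table list needed in order to show the right objects.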

Problem solved! Right? Not so quick. IM showed that there was no data in the Employee table. And all of the invoices in the invoice table had already been imported into GP. Umm.....

Funny thing about integrations: The source data doesn't just materialize magically. Something or someone needs to prepare or make the data available for IM. Well, let's just say that I neglected to dig deep enough in that direction when I performed my initial assessment.

I saw that the integration was reading from a SQL staging table, and that table would not be affected by the upgrade to GP 10, so no worries. And the stored procedure that was being called in the Before Integration script looked good, and would also not be affected by the GP 10 upgrade.

But what I didn't consider was what would happen if GP was moved to a new SQL server. At the time, neither I, the partner, nor the client knew that they would be using a new SQL server, so we didn't discuss the issues such a move would cause.

Naturally, it caused issues, and lots of them.

If you've ever heard of a Rube Goldberg machine, you'll appreciate this particular integration.

It turns out that multiple static HTML intranet web pages, circa 1994, on a separate web server, were using IDC and HTX files to call SQL stored procedures. Never heard of IDC and HTX files? Neither had I, since I started my data-driven web development with ASP 1.0. So the IDC file has a DSN, login info, and a SQL command, which was a stored procedure. When the user accesses these IDC files via IIS, the stored procedure is called.

Sounds simple, right? Well, that stored procedure calls several other stored procedures. Those stored procedures query the GP database and join to a linked SQL server to query data and fill cursors which ultimately populate data in staging tables. But wait, there's more! The stored procedures also manually build and write HTML report files to the SQL server local disk! We're talking hundreds and hundreds of lines of SQL in over a dozen stored procedures. Wait, don't touch that dial! They also use the xp_cmdshell extended stored procedure to map network drives back to the intranet web server and then copy the HTML files to the web server! Yessiree, a stored procedure to map network drives and copy files!

If you aren't scared yet, you are truly a stoic.

Thankfully the IDC files all referenced the same DSN, so I was able to change the DSN on the intranet server to point to the new GP SQL Server. And then, of course, I had to set up a linked server on the new GP SQL server. Whew, all done, right?

Nope. It turns out that the BeforeIntegration scripts in IM had hard-coded references to the old GP SQL server, and did not use RetrieveGlobals. So those scripts had to be updated as well (it was much faster to just change the server name, so I didn't bother to implement the GPConnection object in IM 10).

What was really fun was trying to figure all of this out without any documentation. Also, the integration had been developed and modified extensively by at least two different firms over the course of the last FOURTEEN YEARS, so there were pieces of code all over the place. I had to search for clues just to figure out what I should be searching for as I dug through files and settings on three different servers.

As of today, I think I finally have all of the integrations working. I didn't dare touch a line of code, lest the entire house of cards collapse, so I ended up just having to find all of the database connection settings and change them appropriately.

The real lesson was that although I performed an initial assessment of the integrations prior to the upgrade, I clearly wasn't thorough enough, and made several implicit assumptions--the biggest of which was that GP 10 would be installed on the same server.

So after going through this experience, I created an "integration review template" to help me try and detect some of these issues in advance next time. It's by no means complete, but it has several questions that might have helped me better anticipate some of the issues that I ran into, by at least understanding the complexity of the processes that generate the source data for Integration Manager.

I've posted it for download in case anyone is interested.

Please, integrate safely.

Thursday, December 24, 2009

Table Import is not always the ugly stepsister

For the past few weeks, I have been working on an implementation that goes live Monday 12/28. We have had a fairly tight timeframe, so I have been trying to avoid manual data entry whenever possible. However, I have run up against more than a few things that I needed to import but could not do so with Integration Manager. Table import to the rescue with imports into some simple tables like...

- Direct Deposit Header and Lines for Payroll

- Shipping Methods

- Customer Items

All of these table structures are fairly friendly to table import, and result in simple file uploads. Shipping methods even came up late in the game, and it took less than 10 minutes to build and load the file.

So why am I writing about this? Well, I know I could use the reminder that older tools that I regularly dismiss can actually come in quite handy :)

Please share any of your table import success stories, and I will be happy to update the post to include them.

A few other uses courtesy of Steve Chapman and Frank Harnelly:

- High volume imports even if the destinations are available in Integration Manager, like Customers (1,000,000+ records in minutes) or Chart of Accounts

- Fixed Assets General Info

SmartList Builder and SQL Tables/Views

1. Created two views in SQL based on a combination of GP and custom tables

2. Granted access to the views for SmartList Builder (Tools>>SmartList Builder>>Security>>SQL Table Security)

3. Created my SmartList (Tools>>SmartList Builder>>SmartList Builder)

4. Tested in SmartList, all looked good

5. Deployed views to client site

6. Exported SmartList Builder to XML

7. Imported to client site

8. Granted access to the views for SmartList Builder at the site

9. Error when viewing the SmartList: "You do not have security privileges to view all of the tables used in this SmartList"

10. Followed steps in KB Article #967731 to configure a Security Task and Security Role that includes the operation SmartList Builder\SmartList Builder Permissions\SmartList Builder\View SmartLists with SQL Tables

11. Still got error

12. Granted DYNGRP SELECT permissions on both views

13. Still got error

14. Ran Grant.SQL

15. Still got error

Ugh. And the error happened for both regular users and sa. So what was the issue? Well, you have to have the permissions set BEFORE you import the XML file, so make sure you have those steps completed before you do your import. I did not narrow it down, but I would think steps 8, 12, and 14 would be important. I would do 8, 10, 12, and 14 just to be safe.

Once I had the permissions set, and then imported the XML file, all was good :) It seems that it does not recognize the permissions fully if they are set afterwards.

Happy holidays to all!

Tuesday, December 8, 2009

Don't you go closing my year...

I have to wait until I have ALL my adjusting entries before I can close the GL

This is the biggest myth of all. You can actually have your cake and eat it too. GP allows you to post to the most recent historical year. So, for example, if I close 2009 I can still post adjusting entries to it. I just can no longer post adjustments to 2008. The two requirements to post to the most recent historical year are that you must mark "allow posting to history" in GL setup (Tools>>Setup>>Financial>>General Ledger) and the fiscal period must be open in Fiscal Period Setup (Tools>>Setup>>Company>>Fiscal Periods).

I am running my general ledger year end close, and it is stuck

I promise you the odds are that it is not stuck. The year end close is a pretty intensive process, so it is fairly common for a workstation to show as not responding. Please be patient. Using Ctrl-Alt-Delete to cancel out of the process is NOT recommended, and will REQUIRE you to restore a backup. And if you don't have a backup, well, that is just not fun...and will involve a nice little database fixing service from Professional Services at Microsoft. So, two lessons: have a backup and be patient.

I can view all my audit entries because I set up an audit period

This is a popular trick: set up a 13th period in your Fiscal Period setup with the same date range as the December period. You then close December, and with the 13th audit period open, transactions will post into that audit period. Sounds brilliant, right? Well, it is...as long as you never run financial reconcile (Tools>>Utilities>>Financial>>Reconcile) on that year. Reconcile will reattribute the postings to the earliest period with the same start date (assuming both periods were closed when you ran reconcile), or it will attribute all postings (including your original December postings) to the open period (if the 13th is open and the 12th is closed). Ugh. Better choice? Set up a source document code (Tools>>Setup>>Posting>>Source Document) called AJ for Adjusting Entries and select it whenever you enter an adjusting entry instead of GJ. Then use the Cross Reference by Source Document report to view your AJs for a time period (Reports>>Financial>>Cross Reference>>Source Document).

Fixed Assets year end is so easy, I just change the year on the book right?

Nooooo! You must run the fixed asset year end closing routine (Tools>>Routines>>Fixed Assets>>Year End) in order to close the year properly. Changing the year directly on the books themselves is not recommended, and if you do so and then process transactions in the new year, you will have to restore a backup. Also, keep in mind, Fixed Assets is one module where you must complete all activity for the prior year and close the year BEFORE you can process activity in the new year.

I can close Receivables and Payables whenever I get around to it

Unfortunately, the payables management and receivables management year end closes are not date sensitive. This means that you need to run them as close to the actual end of the year as possible (after posting as much of the prior year activity as you can, but before you have posted to the new year) to get the most accurate close. Now, the good news is that these closes only impact the "Amounts Since Last Close" summary figures for current year -vs- last year that are tracked for reports, inquiries, and SmartList. The close will move all posted transactions to last year (regardless of their actual date) for the summaries. And the current year bucket will start over with any transactions posted after the close, again regardless of their actual posting date.

For example, let's say that on 1/1/2010 I post an entry of $500 to payables and date it 1/1/2010. I then close the year for payables on 1/2/2010. That $500 will be moved to the last year column in the "Amounts Since Last Close" summary figures even though it was posted to the 1/1/2010 date simply because it was actually posted BEFORE the year end close. By that same logic, let's say on 1/3/2010 you enter another payables entry for $1000 and post it back to 12/20/2009. Since the close has already been performed, that $1000 will be tracked in the current year column for the "Amounts Since Last Close" summary figures.
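The rule in that example can be written down as a tiny function. This is only a model of the behavior described above, not GP code, and the names (SummaryBucket, AmountsSinceLastClose, BucketFor) are invented for illustration. The point is that the document date is passed in but never consulted: only the moment of posting relative to the close decides the bucket.

```csharp
using System;

// Hypothetical model of the "Amounts Since Last Close" rule described in the post.
public enum SummaryBucket { LastYear, CurrentYear }

public static class AmountsSinceLastClose
{
    // documentDate is deliberately ignored: the summary bucket depends only on
    // whether the transaction was posted before the year end close was run.
    public static SummaryBucket BucketFor(DateTime documentDate, DateTime postedOn, DateTime closeRunOn)
    {
        return postedOn < closeRunOn ? SummaryBucket.LastYear : SummaryBucket.CurrentYear;
    }
}
```

Feeding in the two transactions from the example, the $500 entry dated and posted 1/1/2010 lands in LastYear because it was posted before the 1/2/2010 close, while the $1000 entry dated 12/20/2009 but posted on 1/3/2010 lands in CurrentYear.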

For most folks, these amount to minor differences that they generally do not notice. However, keep in mind that if you are looking at a vendor or customer summary field in SmartList, it will be using the "Amounts Since Last Close" summary figures. And if you are comparing current year -vs- last year, the summary figures may not match the actual transaction detail.

Well, there is my mythbusting. Please share your own myths, and how you like to bust them :) Not sure how many more blog posts I will make before the little one arrives (January 7th is coming quick) so I will go ahead and wish everyone happy holidays!